dAppForge Streamlines Blockchain Coding on Polkadot with Agentic AI

dAppForge partnered with Neurons Lab to create an agentic AI system that simplifies smart contract development, reduces risks, and ensures adherence to industry standards.

Partner Overview

Polkadot is a next-generation blockchain protocol connecting multiple specialized blockchains into a unified, scalable network. It enables cross-chain transfers of any type of data or asset, not just tokens, thereby unlocking a wide range of real-world use cases. Neurons Lab collaborated with dAppForge to develop an agentic AI Copilot that enhances smart contract development, addressing key challenges faced by financial institutions in the blockchain space.

In the financial services industry, Polkadot facilitates secure, efficient, and compliant transactions, making it an ideal platform for banks, capital markets, and insurance companies looking to leverage blockchain technology. A pioneering blockchain platform, Polkadot plays a critical role in modern financial services, especially within capital markets.

Financial institutions leverage Polkadot’s network for secure and efficient transactions, asset tokenization, and decentralized finance (DeFi) applications.

Project Overview

Financial institutions require robust smart contracts for a variety of applications, including automated trading, asset management, and settlement processes.

However, developing these contracts on the blockchain poses challenges in terms of complexity, security, and compliance with financial regulations.

Neurons Lab created an agentic AI system that simplifies smart contract development, reduces risks, and ensures adherence to industry standards.

Here is a presentation outlining the solution benefits from Christian Casini, dAppForge co-founder and Polkadot Blockchain Academy Founders Track graduate with distinction:

Business Challenges

Financial institutions operating in capital markets face significant challenges when developing smart contracts:

- Complexity and Expertise Gap: Smart contract development requires specialized knowledge, leading to longer development cycles and higher costs.

- Security Risks: Vulnerabilities in smart contracts can result in financial losses, fraud, or regulatory penalties.

- Regulatory Compliance: Ensuring that smart contracts comply with financial regulations is critical to avoid legal issues and maintain trust with stakeholders.

Solution

To address these challenges, Neurons Lab developed an agentic AI solution powered by advanced machine learning models using Amazon SageMaker. The innovative LLM-powered AI agent assists developers by:

- Automating Code Generation: Generates smart contract code snippets based on financial industry templates and best practices.

- Security Enhancement: Integrates real-time security analysis using Amazon Inspector and AWS Security Hub to identify and mitigate vulnerabilities.

- Compliance Integration: Incorporates regulatory compliance checks, using AWS Config for continuous compliance monitoring.

- Secure Key Management: Employs AWS Key Management Service (KMS) to manage encryption keys securely, protecting sensitive financial data within smart contracts.

Architecture

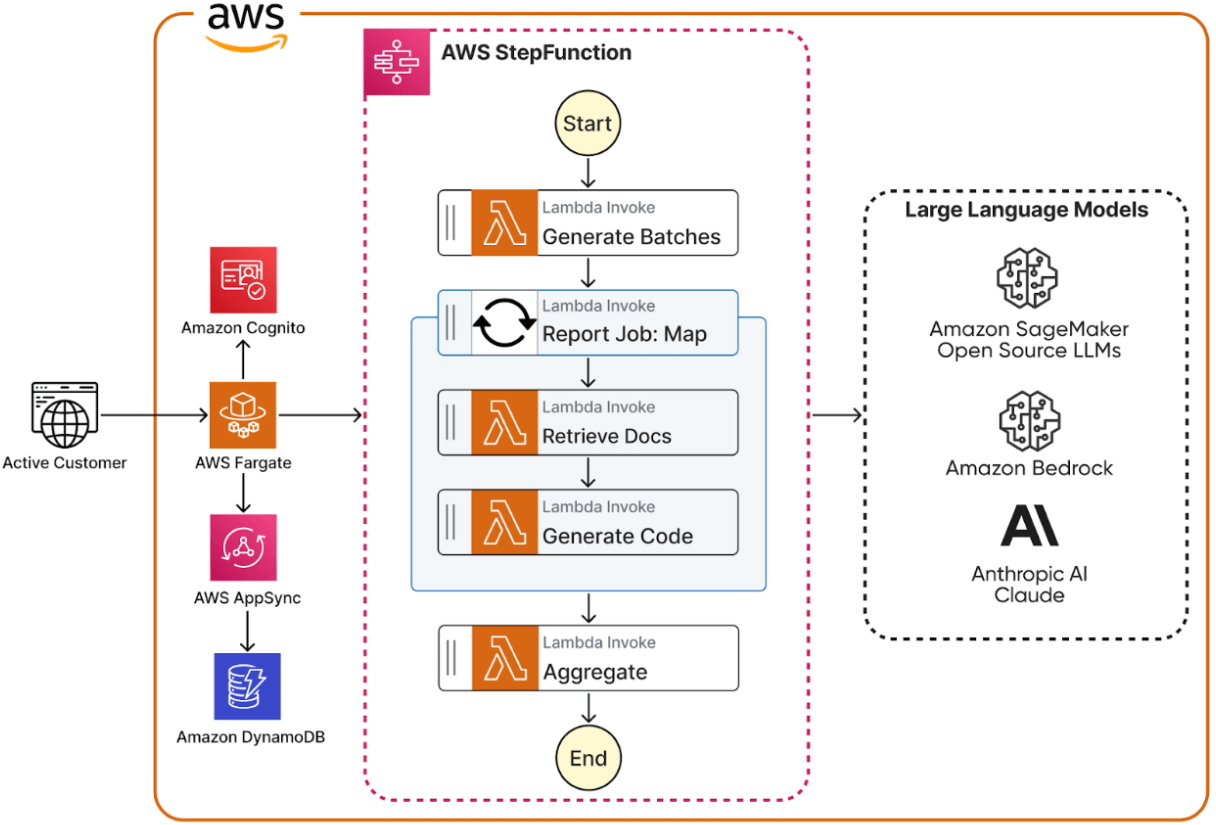

AWS architecture diagram

The architecture of the agent system comprises several AWS services working together to provide a secure, scalable, and compliant solution:

1. Machine Learning Model Development:

- Amazon SageMaker is used to build, train, and deploy the AI models that power the copilot.

2. Code Generation and Analysis:

- AWS Lambda functions process user inputs and generate smart contract code.

- Amazon API Gateway facilitates secure API calls between the user interface and backend services.

3. Continuous Integration/Continuous Deployment (CI/CD):

- AWS CodeCommit stores the code repositories securely.

- AWS CodePipeline automates the build, test, and deployment phases.

4. Security and Compliance:

- AWS Identity and Access Management (IAM) manages user permissions and access controls.

- AWS KMS encrypts sensitive data and manages encryption keys.

- AWS Config and AWS CloudTrail provide configuration monitoring and logging for compliance and auditing purposes.

5. Monitoring and Logging:

- Amazon CloudWatch monitors application performance and resource utilization.

- AWS Security Hub aggregates security findings to ensure compliance with industry standards.

6. Security and Compliance Measures:

- Data Encryption: All data at rest and in transit is encrypted using AWS KMS, ensuring compliance with financial regulations.

- Access Control: AWS IAM policies enforce the principle of least privilege, restricting access to sensitive resources.

- Compliance Monitoring: AWS Config continuously assesses resource configurations for compliance with regulatory standards.

- Audit Logging: AWS CloudTrail records all API calls, providing a detailed audit trail necessary for regulatory compliance.
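As a rough illustration of item 2 above, the following is a minimal sketch of a Lambda handler sitting behind an API Gateway proxy integration. The `prompt` field, the JSON response shape, and the `generate_contract_snippet` helper are assumptions made for illustration; the case study does not describe the actual request format or the model call.

```python
import json


def generate_contract_snippet(prompt: str) -> str:
    """Hypothetical stand-in for the model call; returns a placeholder string."""
    return f"// TODO: generated code for: {prompt}"


def lambda_handler(event, context):
    """Handle an API Gateway proxy event whose JSON body carries a 'prompt' field."""
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return {"statusCode": 400, "body": json.dumps({"error": "invalid JSON"})}

    # The 'prompt' key is an assumed field name, not taken from the case study.
    prompt = payload.get("prompt") if isinstance(payload, dict) else None
    if not isinstance(prompt, str) or not prompt.strip():
        return {"statusCode": 400, "body": json.dumps({"error": "missing 'prompt'"})}

    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"code": generate_contract_snippet(prompt.strip())}),
    }
```

Validating the input before any model call keeps malformed requests from consuming inference capacity; a real deployment would replace the stub with a call to the deployed model endpoint.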

The AWS tech stack included AI infrastructure, foundation models, and tools that draw on two main forms of data:

- Documentation from websites about how to write code, plus best practices

- GitHub code repository based on real projects

The stack extracts and processes the data, feeds it into the model, and builds an AI orchestration layer that combines a retrieval-augmented generation (RAG) system with a Knowledge Graph (KG).

With context from actual data and projects, the vast KG powers the RAG to retrieve better contextual information, enabling it to generate more accurate responses and reducing the frequency of AI hallucinations.

In addition, the application layer contains the copilot itself, which includes a code debugger and optimizer and can make suggestions and complete code.

In terms of data collection:

- From the GitHub code repository, the solution parses the data, filters out specific file extension types, processes the remaining files, and creates document objects, removing any duplicates before creating triplets. Each triplet contains two connected nodes, a text description of the code, and sample code.

- In the document processing pipeline, a website scraper detects URLs, parses text and code, converts them into document objects, removes duplicates, and feeds the result into a triplet structure. Here the triplet is a text-to-text relation with no code.
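The filtering and deduplication steps above can be sketched as follows. This is an illustration under stated assumptions, not the project's pipeline: the excluded extensions, the `Document` class, and the choice of a SHA-256 hash of the stripped text as the duplicate key are all hypothetical.

```python
import hashlib
from dataclasses import dataclass
from pathlib import PurePosixPath

# Assumed extensions to filter out; the case study does not list them.
EXCLUDED_EXTENSIONS = {".png", ".lock", ".svg"}


@dataclass(frozen=True)
class Document:
    source: str  # path or URL the text came from
    text: str    # raw file or page contents


def build_documents(files: dict[str, str]) -> list[Document]:
    """Drop excluded file types and exact-duplicate contents, keeping first occurrences."""
    seen: set[str] = set()
    docs: list[Document] = []
    for path, text in files.items():
        if PurePosixPath(path).suffix in EXCLUDED_EXTENSIONS:
            continue
        # Hash the whitespace-stripped text so trailing newlines do not defeat dedup.
        digest = hashlib.sha256(text.strip().encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        docs.append(Document(source=path, text=text))
    return docs
```

Exact-match hashing only catches identical files; near-duplicates (for example, forks with small edits) would need a fuzzier comparison.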

The code generation module has a KG construction process. The LLM quickly generates triplets based on preset prompts. Once generated, the module indexes the triplets and converts them into the KG.

knowledge graph
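A minimal sketch of the indexing step, assuming each triplet carries the fields described earlier (two connected nodes, a description, and optional sample code). The `Triplet` class, its field names, and the adjacency-list representation are illustrative choices; the case study does not say how the KG is stored.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Triplet:
    head: str              # first node
    tail: str              # second node, connected to the first
    description: str       # text describing the relation or the code
    sample_code: str = ""  # empty for text-to-text triplets from documentation


def build_graph(triplets):
    """Index triplets by node so every node maps to the triplets that touch it."""
    graph = defaultdict(list)
    for t in triplets:
        graph[t.head].append(t)
        if t.tail != t.head:  # avoid listing a self-loop twice
            graph[t.tail].append(t)
    return graph
```

Indexing by both endpoints means a later lookup on either node finds the triplet, which is what a retrieval step over the graph needs.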

After the user submits contextual code, the search and retrieval phase begins: the system takes the query and searches the KG for relevant information. It then creates a sub-graph, a small set of nodes and the relations between them that are relevant to the query.
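One simple way to picture sub-graph extraction is a breadth-first expansion around the nodes mentioned in the query. The sketch below assumes seed nodes are found by exact token match and that the neighbourhood is bounded by a `hops` parameter; both are assumptions, as the case study does not describe the actual matching or traversal strategy.

```python
from collections import deque


def extract_subgraph(edges, query, hops=1):
    """Return the edges whose endpoints both lie within `hops` of a node named in the query."""
    adjacency = {}
    for head, tail in edges:
        adjacency.setdefault(head, set()).add(tail)
        adjacency.setdefault(tail, set()).add(head)

    # Seed nodes: graph nodes whose name appears as a token in the query (assumed matching rule).
    tokens = set(query.lower().split())
    seeds = [node for node in adjacency if node.lower() in tokens]

    # Breadth-first search records the shortest distance from any seed.
    depth = {node: 0 for node in seeds}
    queue = deque(seeds)
    while queue:
        node = queue.popleft()
        if depth[node] == hops:
            continue
        for neighbour in adjacency[node]:
            if neighbour not in depth:
                depth[neighbour] = depth[node] + 1
                queue.append(neighbour)

    return [(h, t) for h, t in edges if h in depth and t in depth]
```

Raising `hops` widens the context handed to the generator, at the cost of a longer prompt.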

Next, the code generation module produces code based on the sub-graph, guided by a preset prompt that dictates how the output should be structured. After generating the code, the system scores it:

- If the score is good: The system sends it to the user.

- If the score is bad: The system performs a more in-depth search of the KG, updates the context length, and repeats the search and retrieval until the score improves. When the code receives a good score, the system sends it back to the user.
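The score-and-retry loop above can be sketched as follows. The `retrieve`, `generate`, and `score` callables, the numeric `threshold`, and the `max_rounds` cap are all hypothetical; the cap in particular is an added assumption so that the loop terminates even if the score never reaches the threshold.

```python
from typing import Callable


def generate_with_retry(
    query: str,
    retrieve: Callable[[str, int], str],   # (query, search depth) -> context
    generate: Callable[[str, str], str],   # (query, context) -> code
    score: Callable[[str], float],         # code -> quality score
    threshold: float = 0.8,
    max_rounds: int = 3,
) -> tuple[str, float, int]:
    """Deepen the KG search each round until the code scores at or above the threshold."""
    best_code, best_score = "", float("-inf")
    for depth in range(1, max_rounds + 1):
        context = retrieve(query, depth)   # deeper search yields more context
        code = generate(query, context)
        current = score(code)
        if current > best_score:
            best_code, best_score = code, current
        if current >= threshold:
            return code, current, depth    # good score: send to the user
    # Cap reached: fall back to the best attempt seen so far.
    return best_code, best_score, max_rounds
```

Returning the best attempt on exhaustion is one possible fallback; a system could equally report the failure to the user instead.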

This solution gives developers more relevant and up-to-date code structures in a new industry where few best practices and examples are widely available. It provides better code, helps developers write code faster, and lets them take inspiration from industry experts, which in turn grows the community.

Results

Neurons Lab extensively tested the performance of this KG + LLM model and reported the following results:

- 50% Reduction in development time: The AI Copilot streamlined the smart contract development process, reducing time-to-market for financial products.

- 30% Decrease in security vulnerabilities: Automated security checks and best practices reduced code vulnerabilities.

- 40% Improvement in compliance adherence: Integrated compliance checks ensured smart contracts met regulatory requirements.

In these assessments, participants interacted with the RAG application and rated each response at one of three levels of performance. The same ratings were collected for an LLM without a KG for comparison:

- Success: Instances where the model excelled in delivering accurate results.

- Failed: Scenarios where the model struggled to generate satisfactory outcomes.

- Partial: Situations where the model’s performance was only partially correct.

The KG + LLM model increased the success rate by 34.75% in relative terms compared to the standalone LLM (41.18% versus 30.56%). It also showed a 54.62% relative decrease in partial successes (17.65% versus 38.89%), indicating more definitive outcomes. The failure rate rose as well, from 27.78% to 41.18%, so the shift away from partial results produced more outright failures alongside more successes.

KG + LLM:

- Success Rate: 41.18%

- Failed: 41.18%

- Partial Success: 17.65%

Vanilla LLM:

- Success Rate: 30.56%

- Failed: 27.78%

- Partial Success: 38.89%
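The percentage changes quoted above are relative changes between the two sets of rates, not percentage-point differences. A short check, using only the figures listed in this section:

```python
def relative_change(new: float, old: float) -> float:
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100


# Rates in percent, copied from the two lists above.
kg_llm = {"success": 41.18, "failed": 41.18, "partial": 17.65}
vanilla = {"success": 30.56, "failed": 27.78, "partial": 38.89}

for outcome in kg_llm:
    change = relative_change(kg_llm[outcome], vanilla[outcome])
    print(f"{outcome}: {change:+.2f}%")
# success: +34.75%
# failed: +48.24%
# partial: -54.62%
```

In percentage points, the same comparison is a gain of 10.62 points in success, a rise of 13.40 points in failures, and a drop of 21.24 points in partial successes.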

I received a new LLM version to test every two weeks, which was terrific. The speed and efficiency demonstrate how quickly you work and meet my needs. The team was incredibly responsive, even on weekends. I appreciate that I received prompt answers whenever I reached out on Slack.

About us: Neurons Lab

Neurons Lab delivers AI transformation services to guide enterprises into the new era of AI. Our approach covers the complete AI spectrum, combining leadership alignment with technology integration to deliver measurable outcomes.

As an AWS Advanced Partner and GenAI competency holder, we have successfully delivered tailored AI solutions to over 100 clients, including Fortune 500 companies and governmental organizations.
