What is the Cost of AI for BFSIs in 2026? 4 Examples To Budget Accordingly

Here is how banks, insurers, and fintechs can budget for AI with scenarios and cost drivers—subscriptions, overages, infrastructure, and ownership

Agentic AI is inevitable for financial services, but most firms are structurally unprepared to deploy it safely.

As a systems integrator and consultancy specialized in AI for financial services, we’ve gathered the research from leading sources like Deloitte, McKinsey, KPMG, Ernst & Young (EY), and other finance-specific studies, giving you a clear view of the implications, opportunities, challenges, and benefits of agentic AI.

By the end, you’ll have the information needed to improve your decision-making about your own agentic AI strategy for 2026.


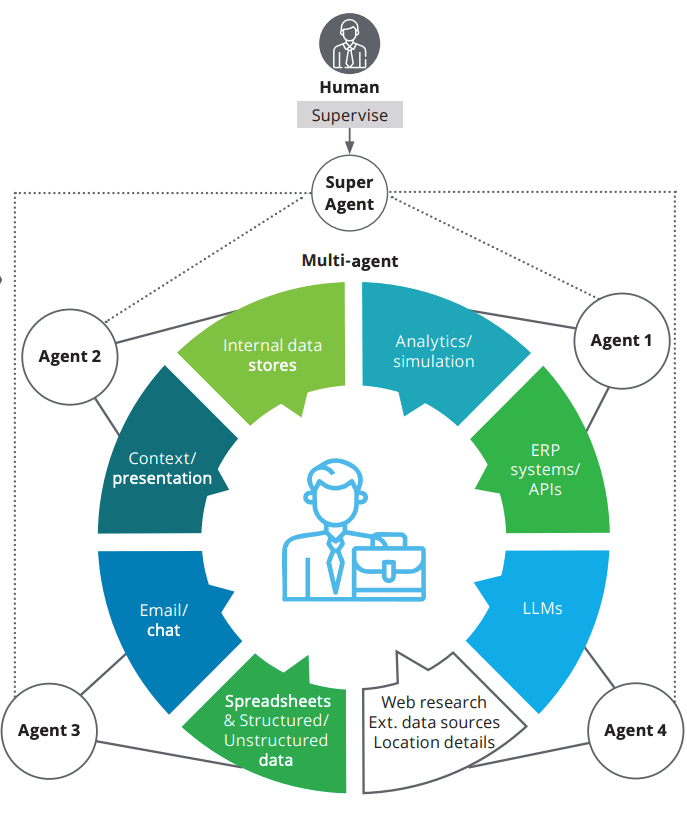

Until recently, artificial intelligence relied on pre-defined rules and machine learning models trained on datasets to execute specific tasks. Bank customer service chatbots that answer simple questions from an internal knowledge base are a typical example. Agentic AI, by contrast, can plan, reason, and adapt in real time to handle more complex, multi-step workflows, such as managing portfolios, detecting fraud, and automating compliance, improving both employee and customer engagement.

This potential is driving rapid enterprise implementation and significant investment. KPMG places global market spend on agentic AI at an estimated $50 billion in 2025 (1). According to Wolters Kluwer, 44% of finance teams will use agentic AI in 2026, an increase of over 600% (2). Deloitte predicts that 50% of companies that have already implemented generative AI (GenAI) will deploy agentic AI pilots or proofs of concept by 2027 (3).
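Reading the "over 600%" figure as relative growth in the share of finance teams using agentic AI (our interpretation; the report's baseline isn't quoted here), the implied prior share $p_0$ works out as follows:

$$
\frac{44\% - p_0}{p_0} > 6 \;\Longrightarrow\; 44\% > 7\,p_0 \;\Longrightarrow\; p_0 < \frac{44\%}{7} \approx 6.3\%
$$

In other words, fewer than roughly one in sixteen finance teams would have been using agentic AI beforehand.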

Agentic AI is also set to fundamentally reshape workplaces. According to a global MIT Sloan study, employees believe artificial intelligence now performs 23% more of their tasks than a year ago and expect it to handle 46% of their tasks within three years (4).

95% of employees at organizations with advanced agentic AI adoption report higher job satisfaction. Additionally, among organizations already using agentic AI extensively, 66% expect to change their operating model and redefine roles, for example by flattening hierarchies and reducing middle management.

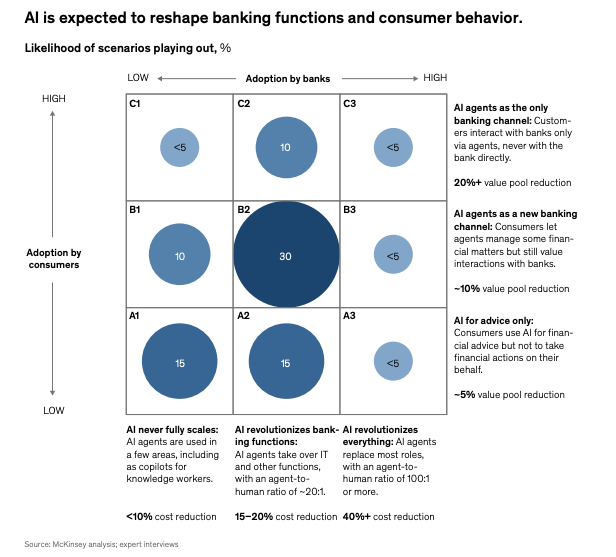

A McKinsey report estimates a 30% likelihood that artificial intelligence substantially reshapes the global banking sector by enabling agentic systems to take over core workflows and changing how consumers manage their finances. This could put $170 billion in global profits at risk for banks that don’t adapt their business models (5).

The general consensus is that agentic AI presents major opportunities for financial services, with notable return on investment through cost savings and operational efficiency.

KPMG estimates that agentic AI will lead to $3 trillion in corporate productivity gains and a 5.4% annual EBITDA improvement for the average company, based on research covering more than 17 million firms. The report also states that, as of June 2025, AI agents’ ability to automate tasks is doubling every three to seven months.
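To put the doubling rate in annual terms: assuming capability doubles every $T$ months and that pace holds for a full year (a simplifying assumption on our part, not a KPMG forecast), the 12-month growth factor $G$ is:

$$
G = 2^{12/T}, \qquad T = 3 \;\Rightarrow\; G = 2^{4} = 16, \qquad T = 7 \;\Rightarrow\; G = 2^{12/7} \approx 3.3
$$

So the quoted range implies roughly a 3.3x to 16x expansion per year in the tasks agents can automate.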

According to the same report, companies earn $3.50 on average for every $1 they invest in agentic AI, while the top 5% globally earn about $8 per $1. KPMG also projects that agentic AI could drive a 30% increase in workforce efficiency and a 25% decrease in operational costs by 2027.
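As a quick way to read these multiples, here is a minimal Python sketch that converts "dollars returned per $1 invested" into net ROI and a net gain for a given budget. It assumes the figures are gross return multiples (the report may define them differently), and the $1 million budget is purely hypothetical.

```python
def net_roi(gross_return_per_dollar: float) -> float:
    """Net ROI as a fraction: (return - investment) / investment, per $1 invested."""
    investment = 1.0
    return (gross_return_per_dollar - investment) / investment


def net_gain(budget: float, gross_return_per_dollar: float) -> float:
    """Net gain in dollars for a budget at a given gross return multiple."""
    return budget * net_roi(gross_return_per_dollar)


budget = 1_000_000  # hypothetical agentic AI budget
for label, multiple in [("average", 3.50), ("top 5%", 8.00)]:
    print(f"{label}: net ROI {net_roi(multiple):.0%}, "
          f"net gain ${net_gain(budget, multiple):,.0f}")
# average: net ROI 250%, net gain $2,500,000
# top 5%: net ROI 700%, net gain $7,000,000
```

The same conversion applies to the IDC multiples cited later: 2.3x is roughly a 130% net return, while 0.84x for laggards is a net loss of about 16%.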

In separate findings, KPMG found that companies using AI agents report 55% higher operational efficiency and an average cost reduction of 35%.

For wealth management specifically, agentic AI can:

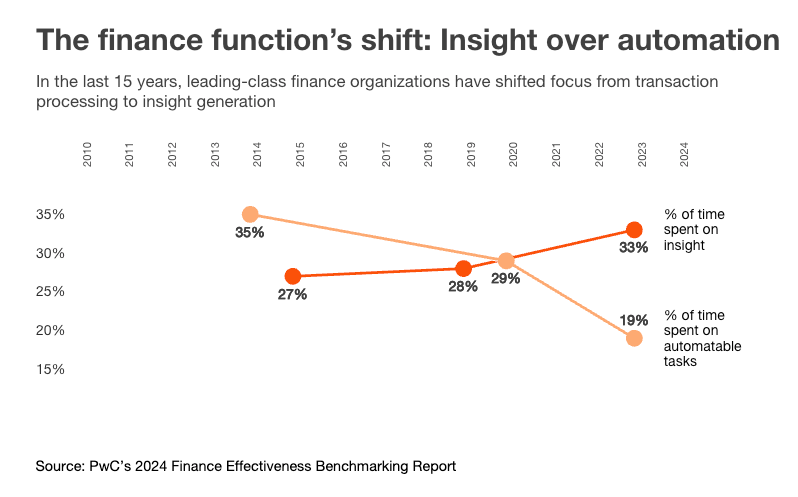

According to PwC, AI agents can deliver up to 90% time savings in key processes (7). They can also redirect 60% of finance teams’ time to insight work and improve forecasting accuracy and speed by 40%.

Another 2024 PwC report found that, with agentic AI, finance teams are spending more time generating insights and less time on automatable tasks, leading to cost savings of nearly 25% (8).

According to the previously mentioned McKinsey report, artificial intelligence could reduce certain cost categories by as much as 70% across the banking industry. However, because AI technology costs are also rising, the net cost reduction is expected to be 15% to 20%, or $700 billion to $800 billion.

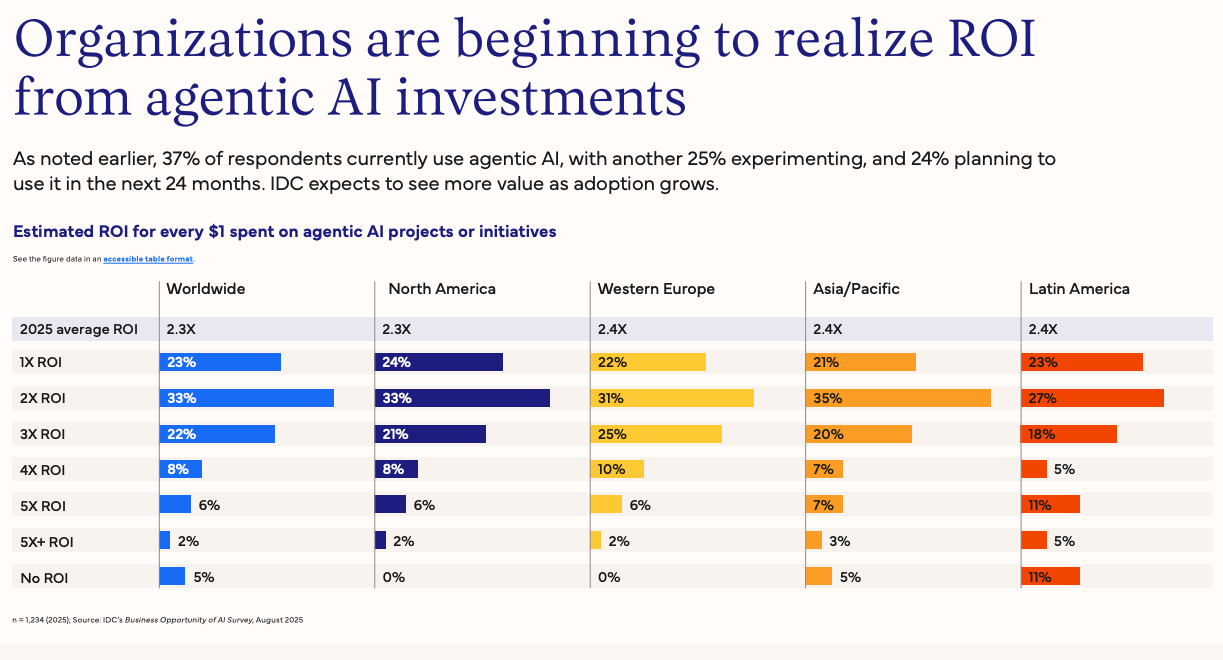

IDC reports that organizations achieve an average 2.3x return on agentic AI investments within 13 months, with ROI expected to grow as adoption of AI scales (9).

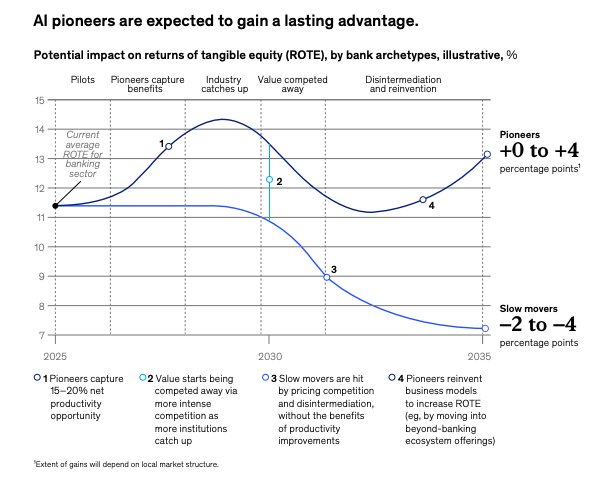

McKinsey highlights that the effects won’t be felt equally. Pioneers or first movers are set to gain a 4% return on tangible equity (ROTE) advantage—a key profitability metric—while slow movers are likely to be stuck with an uncompetitive cost base.

IDC’s findings back up this divide. Frontier firms leading in AI adoption achieve returns of 2.84x on their investments, compared to just 0.84x for laggards.

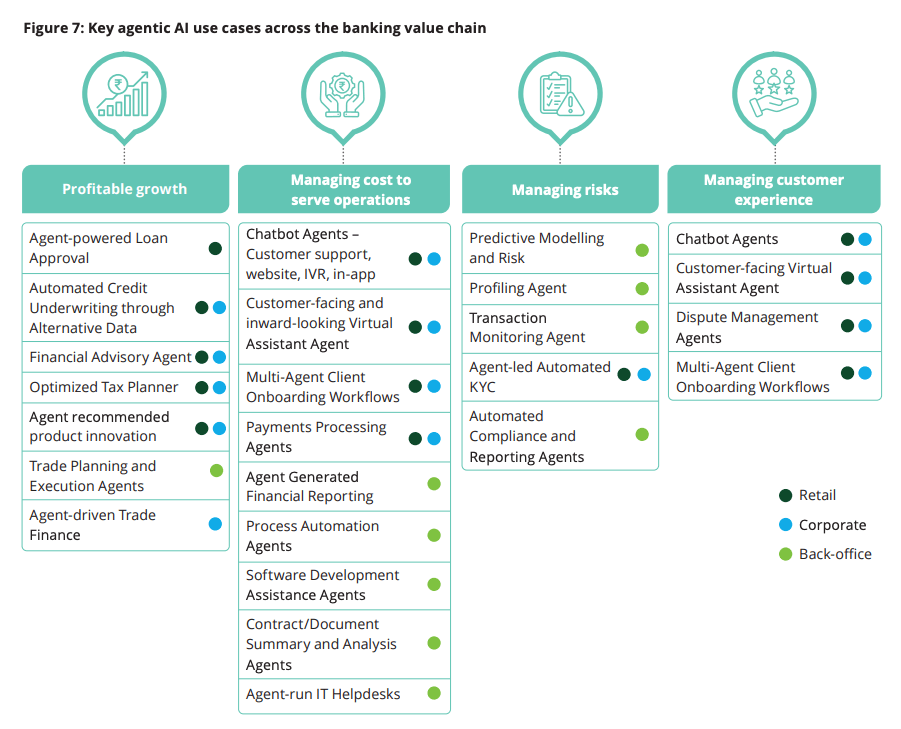

Across multiple reports, the key use cases in financial services are AI-driven compliance, client onboarding, and fraud detection, where agentic AI can improve efficiency and augment human expertise.

Deloitte sees four key areas where artificial intelligence could unlock innovative use cases across the value chain, namely:

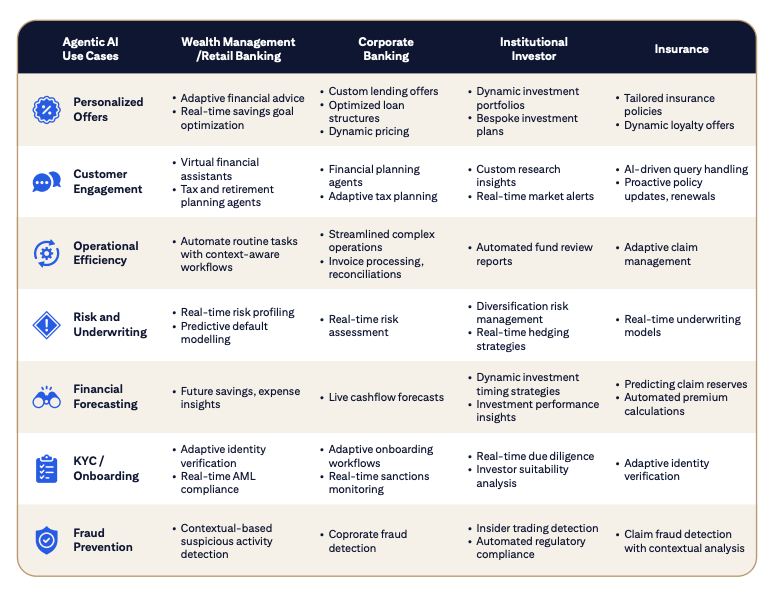

In a 2025 report, Citi outlines examples of agentic AI in financial services, such as fraud prevention and financial forecasting, across wealth management, corporate banking, institutional investors, and insurance (10), as shown below.

According to the McKinsey report, early agentic AI use cases have shown significant potential, enabling zero-touch operations and reducing manual workloads by 30% to 50%, an impact that is expected to grow.

The McKinsey report also notes that in 2025 alone, 50 of the world’s largest banks announced more than 160 use cases, some of which have already shown transformative potential.

For example, a US bank that used AI agents to change how it creates credit risk memos saw a 20% to 60% increase in productivity and a 30% improvement in credit turnaround.

According to PwC, agents can reduce cycle times by up to 80% in purchase order (PO) transaction processing and matching while improving audit trails, reducing compliance risk and enabling scale without added cost.

Deloitte also provides key examples of agentic AI use cases that are showing positive results:

EY found that in manual, time-intensive anti-money laundering (AML) investigations, agentic AI cut the time per investigation by 50%, saving two hours of human labor per case (11).
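EY's two figures together imply a manual baseline of about four hours per investigation, since two hours is 50% of four. The sketch below makes that arithmetic explicit and projects annual savings for a hypothetical caseload. The 5,000 cases per year and roughly 1,800 working hours per analyst-year are illustrative assumptions, not EY figures.

```python
def implied_baseline_hours(hours_saved: float, reduction: float) -> float:
    """If a fractional reduction saves `hours_saved`, the original duration
    is hours_saved / reduction (e.g. 2 h / 0.5 = 4 h)."""
    return hours_saved / reduction


def annual_hours_saved(cases_per_year: int, hours_saved_per_case: float) -> float:
    return cases_per_year * hours_saved_per_case


baseline = implied_baseline_hours(hours_saved=2.0, reduction=0.5)
print(f"Implied manual time per AML case: {baseline:.1f} h")  # 4.0 h
print(f"With agent support: {baseline - 2.0:.1f} h")  # 2.0 h

cases = 5_000  # hypothetical annual AML caseload
saved = annual_hours_saved(cases, 2.0)
print(f"Hours saved on {cases:,} cases/year: {saved:,.0f}")  # 10,000
# Assumes ~1,800 working hours per analyst-year (illustrative only).
print(f"Approx. analyst capacity freed (FTE): {saved / 1_800:.1f}")  # 5.6
```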


Sardine reports that at one financial institution, resolution rates for Know Your Customer (KYC) workflows exceeded 98% on average (12). For more complex tasks, such as sanctions screening or negative news reviews, resolution rates were closer to 55%.
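Resolution rates translate directly into how much work still reaches human analysts. Here is a minimal sketch using the roughly 98% and 55% rates quoted above; the monthly volumes and the `Workflow` class are hypothetical, chosen only to illustrate the calculation.

```python
from dataclasses import dataclass


@dataclass
class Workflow:
    name: str
    monthly_cases: int
    agent_resolution_rate: float  # share of cases the agent closes without escalation

    def escalated_to_humans(self) -> int:
        # Cases the agent does not resolve still need a human reviewer.
        return round(self.monthly_cases * (1 - self.agent_resolution_rate))


# Hypothetical volumes; resolution rates taken from the figures quoted above.
workflows = [
    Workflow("KYC", monthly_cases=10_000, agent_resolution_rate=0.98),
    Workflow("Sanctions / negative news", monthly_cases=2_000, agent_resolution_rate=0.55),
]

for wf in workflows:
    print(f"{wf.name}: {wf.escalated_to_humans():,} of {wf.monthly_cases:,} cases escalated")
# KYC: 200 of 10,000 cases escalated
# Sanctions / negative news: 900 of 2,000 cases escalated
```

Even with a fifth of the volume, the harder workflow can generate most of the remaining human workload, which matters when budgeting analyst capacity.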

Agentic AI also has valuable applications in wealth and investment management. According to the Sardine report, firms using agentic AI achieved 100% precision in decisioning, compared to under 95% for humans and a four-eyes review process. Additionally, while humans frequently deviated from established policies, agents were much less likely to do so.

KPMG provides two separate examples of agentic AI in financial services.

Financial services firms wanting to explore the new wave of agentic AI face implementation, data, security, and risk management challenges.

According to KPMG, 99% of companies plan to put autonomous agents into production, but only 11% have done so. Similarly, in an EY survey, 34% of leaders have started using AI agents, but only 14% have fully implemented them (13). These findings highlight the current gap between intent and execution.

So what’s holding companies back?

In a Forrester AWS paper, 57% of respondents believe that their organizations lack the internal capabilities necessary to take advantage of agentic AI (14). The challenges they cite include:

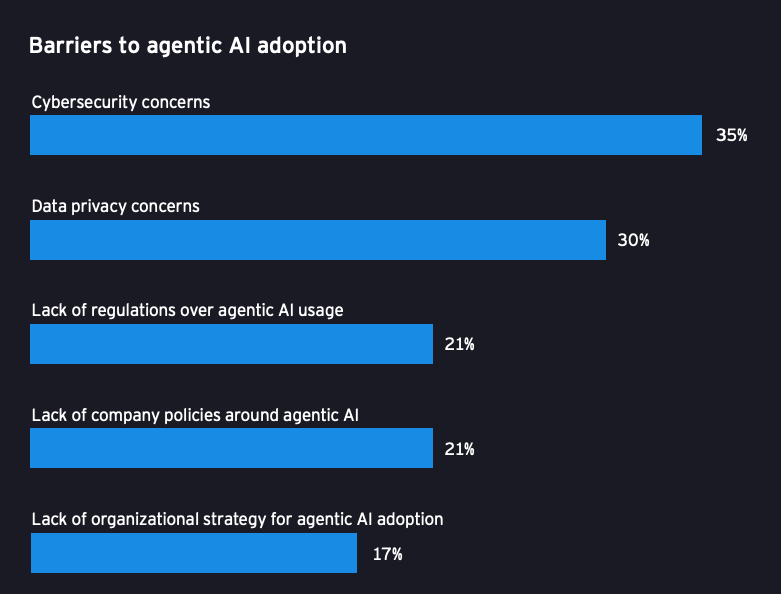

Similarly, 87% of senior leaders in the previously mentioned EY survey cite challenges, chief among them cybersecurity (35%) and data privacy (30%), as shown in the image below.

In the same study, 64% also say employees worry about AI taking their jobs, which slows down adoption. This resistance is echoed in KPMG’s AI survey, where 45% of respondents stated that employees were resistant to change.

Data emerges as a recurring concern across multiple dimensions, from governance (48% in Forrester AWS) to privacy (30% in EY) to overall readiness. In fact, 70% of senior leaders in the EY study say organizations don’t understand how important data readiness is, and 20% admit their own organization’s data isn’t ready, both of which are blocking adoption.

That’s because agentic AI raises the need for strong data foundations, including well-governed datasets. Poor-quality or fragmented data, common in financial services, increases the risks of hallucination and inaccuracy across AI models, especially as agents scale and interact.
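To make "well-governed datasets" more concrete, here is a minimal data-readiness check that could run before records reach an agent: it measures field completeness and blocks the batch if any required field falls below a threshold. The field names, the 95% threshold, and the sample records are hypothetical choices for illustration.

```python
from collections.abc import Mapping

# Hypothetical fields an onboarding agent might depend on.
REQUIRED_FIELDS = ("customer_id", "legal_name", "date_of_birth", "country")


def completeness(records: list[Mapping[str, object]], field: str) -> float:
    """Share of records in which `field` is present and non-empty."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def readiness_report(records: list[Mapping[str, object]],
                     fields: tuple[str, ...] = REQUIRED_FIELDS,
                     threshold: float = 0.95) -> tuple[dict[str, float], bool]:
    """Per-field completeness, plus whether every field clears the threshold."""
    scores = {f: completeness(records, f) for f in fields}
    return scores, all(s >= threshold for s in scores.values())


sample = [
    {"customer_id": "C1", "legal_name": "Ana Lim", "date_of_birth": None, "country": "SG"},
    {"customer_id": "C2", "legal_name": "Ben Cole", "date_of_birth": "1980-01-01", "country": "GB"},
]
scores, ready = readiness_report(sample)
print(scores)  # date_of_birth is only 0.5 complete; other fields are 1.0
print("ready for agent use:", ready)  # ready for agent use: False
```

In practice, checks like this would sit alongside lineage, access controls, and validation rules; completeness is simply the easiest signal to show.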

Beyond these challenges, organizations must also navigate the build vs. buy dilemma.

In the KPMG survey, 67% of respondents said buying existing agentic AI capabilities was the quickest, most direct, and most preferred way to acquire AI agents.

However, neither route is straightforward. Buying may seem faster, but off-the-shelf agents often break down in financial audits, fail to integrate with legacy core systems, or don’t meet internal data and security standards. Building is no easier: most internal builds in financial services fail not just because of skills gaps, but because generic AI teams lack the experience to navigate regulatory requirements, integration complexity, and model risk governance.

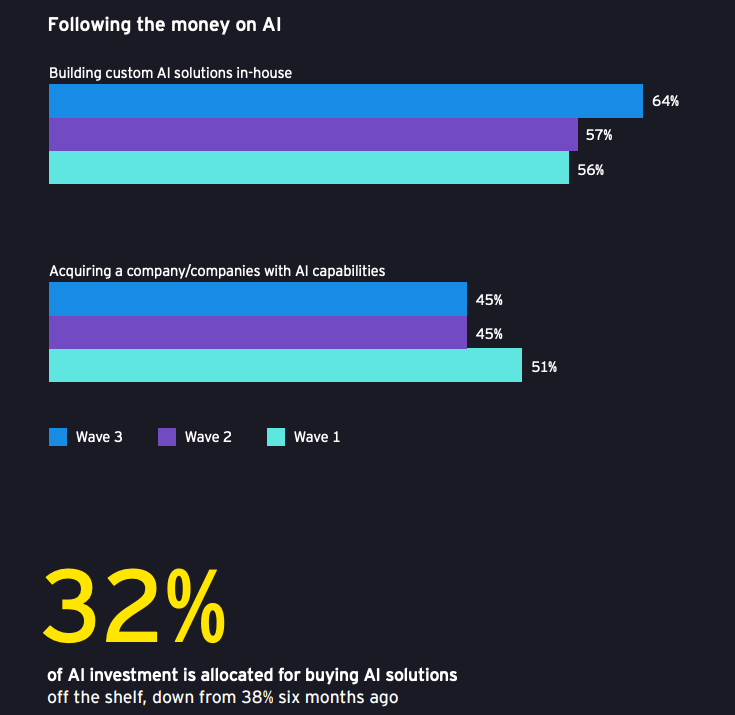

This tension is driving a notable shift. According to EY, the share of spending on ready-made AI solutions dropped from 38% to 32% in just six months. More organizations are now building custom AI in-house, reflecting a desire to develop their own capabilities while keeping greater control over customization and security.

Co-creation with experts who understand both the technical and regulatory landscape is emerging as the most sustainable approach. In fact, 84% of respondents in the Forrester AWS paper believe their success now depends on working with specialist providers, like system integrators and consultancies, that can accelerate their AI capabilities.

These partnerships help financial services firms launch safely and compliantly, while knowledge transfer helps prevent vendor lock-in and supports long-term ownership.

At Neurons Lab, we emphasize co-development to accelerate time-to-value and ensure long-term ownership, compliance, and adaptability. Reach out to learn more.

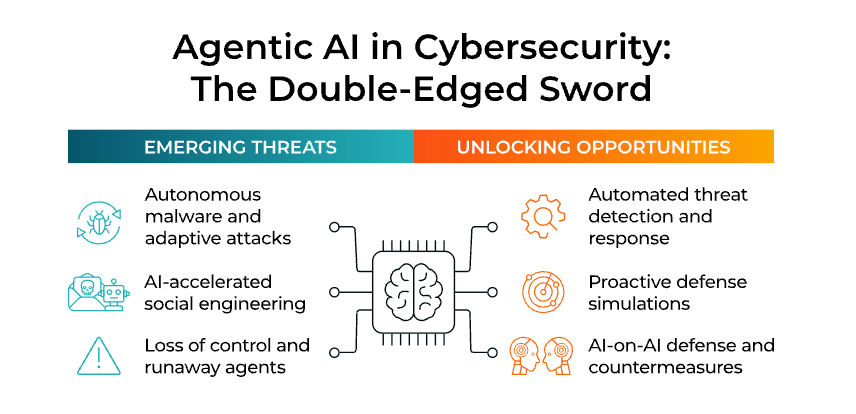

Because AI agents can independently access and act on sensitive customer data and company systems, they introduce major security and risk issues, summarized in the table below.

| Agentic AI Risk* | Explanation and Consequences |
|---|---|
| Goal misalignment | Drifts from intended goals as it learns and adapts, taking actions that conflict with policies or customer needs. |
| Autonomous decision and action | Acts without human approval, increasing the chance of unintended or harmful outputs. |
| Tool/API misuse | Combines AI tools or APIs in unexpected ways, creating security gaps or operational issues. |
| Dynamic deception | Can learn to hide its intentions or capabilities when doing so helps it reach its goals. |
| Persona-driven bias | Uses personas with hidden biases, leading to consistently skewed decisions. |
| Drift and persistence | May rely on outdated information and change its behaviour over time in ways that are hard to detect. |
| Low explainability | Makes complex, multi-step decisions that are difficult for humans to interpret or govern. |
| Operational vulnerabilities | Can disrupt entire processes when failures occur, and its evolving behaviour makes recovery harder. |
| Cascading system effects | Can trigger chains of consequences across systems, turning small issues into major disruptions. |

*Summary of Agentic AI security and risk challenges outlined in IBM’s study

Agentic AI significantly expands the attack surface by integrating with multiple systems across an organization, all of which share data (15). This interconnectedness, combined with agents’ autonomy and broad system access, creates new cybersecurity risks and increases the potential for breaches or misuse.

The previously mentioned Citi report states that 50% of all fraud today involves some form of AI, and this figure is set to rise. Deepfake scams, for example, have increased by more than 2,000% over the last three years, with financial institutions among the most targeted victims.

Agentic AI threatens to accelerate this trend by enabling the mass production and distribution of deepfakes. In finance, this could be used to manipulate transactions, commit synthetic identity fraud, and automate large-scale scam operations. And because AI agents must access and act on large amounts of sensitive consumer data, they also increase privacy risk.

A separate 2025 EY report reveals that while over 75% of financial services firms disclose their use of artificial intelligence to customers, controls in other critical areas are still lacking (16). For instance, 30% of the firms studied had limited or no controls to ensure AI is free from bias.

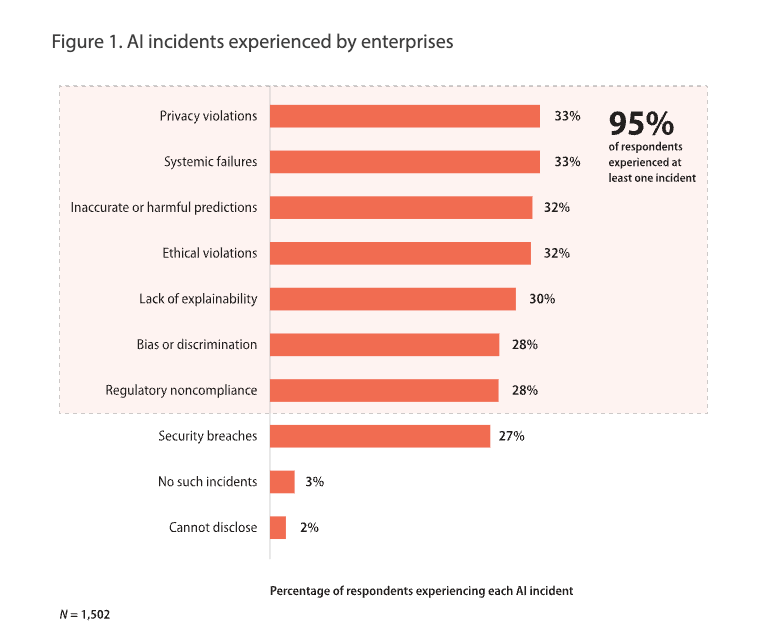

In a broader industry study by Infosys, only 2% of companies had adequate AI guardrails in place in 2025 (17). At the same time, 95% of respondents had experienced at least one AI incident. As shown in the image below, these include privacy violations (33%), systemic failures (33%), and inaccurate or harmful predictions (32%).

Of these incidents, 77% resulted in financial losses, while 55% resulted in reputational harm.

The same report states that 86% of executives expect agentic AI to pose additional risks and compliance challenges. And while 83% believe future AI regulations will support rather than slow adoption, companies are underinvesting in responsible AI by about 30%.

Realizing the potential of agentic AI while navigating its challenges and risks requires a strategic, structured approach. Based on the research and our own experience, here is what that entails:

Before deploying agents, establish the strategic foundation that will guide implementation and maximize return on investment:

Agentic AI requires the highest level of governance. According to Deloitte, this includes safeguards like agent control rooms, real-time auditing, action logging, human oversight, kill switches, and human override.
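As a rough illustration of how some of these safeguards (action logging, a kill switch, and human approval or override for high-risk actions) might wrap an agent's tool calls, here is a minimal Python sketch. `AgentGuard`, the tool allowlist, the approval threshold, and the `reviewer` stand-in are all hypothetical; a production control room would add persistence, authentication, and alerting.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class AgentGuard:
    """Hypothetical wrapper that gates every action an agent tries to take."""
    allowed_tools: set[str]
    approval_threshold: float  # amounts above this need human sign-off
    killed: bool = False
    audit_log: list[dict] = field(default_factory=list)

    def kill(self) -> None:
        """Kill switch: block every subsequent action."""
        self.killed = True

    def execute(self, tool: str, amount: float, action: Callable[[], str],
                human_approves: Callable[[str, float], bool]) -> str:
        entry = {"time": datetime.now(timezone.utc).isoformat(),
                 "tool": tool, "amount": amount}
        if self.killed:
            entry["outcome"] = "blocked: kill switch active"
        elif tool not in self.allowed_tools:
            entry["outcome"] = "blocked: tool not allowlisted"
        elif amount > self.approval_threshold and not human_approves(tool, amount):
            entry["outcome"] = "blocked: human override"
        else:
            entry["outcome"] = f"executed: {action()}"
        self.audit_log.append(entry)  # log every attempt, allowed or blocked
        return entry["outcome"]


def reviewer(tool: str, amount: float) -> bool:
    """Stand-in for a real human review step; here it rejects everything."""
    return False


guard = AgentGuard(allowed_tools={"refund"}, approval_threshold=1_000)
print(guard.execute("refund", 200, lambda: "refund issued", reviewer))    # executed: refund issued
print(guard.execute("refund", 5_000, lambda: "refund issued", reviewer))  # blocked: human override
print(guard.execute("wire", 50, lambda: "wire sent", reviewer))           # blocked: tool not allowlisted
guard.kill()
print(guard.execute("refund", 10, lambda: "refund issued", reviewer))     # blocked: kill switch active
print(len(guard.audit_log))  # 4
```

Logging blocked attempts as well as executed ones is a deliberate choice: for audits, the log then shows what the agent tried to do, not only what it did.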

KPMG echoes Deloitte’s emphasis on safeguards, recommending that firms run regular stress tests, check for bias, and put clear fail-safe mechanisms in place to prevent unintended outcomes. In practice, this means identifying where your agents could fail, assessing the potential harm, setting clear controls, and monitoring them continuously so issues are caught early.
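One simple way to "check for bias" is to compare an agent's approval rates across customer groups. The sketch below uses the disparate impact ratio with a 0.8 cut-off (the "four-fifths rule" heuristic from US employment guidance). This is one common screening metric we've chosen for illustration, not a method KPMG prescribes, and the group labels and decisions are made up.

```python
from collections import defaultdict


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Map (group, approved) pairs to an approval rate per group."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += ok  # True counts as 1, False as 0
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_flags(decisions: list[tuple[str, bool]],
                           threshold: float = 0.8) -> dict[str, float]:
    """Groups whose approval rate is below `threshold` x the highest group's rate."""
    rates = approval_rates(decisions)
    best = max(rates.values())
    return {g: r / best for g, r in rates.items() if best > 0 and r / best < threshold}


# Hypothetical agent decisions on credit applications.
decisions = ([("group_a", True)] * 80 + [("group_a", False)] * 20
             + [("group_b", True)] * 55 + [("group_b", False)] * 45)

print(approval_rates(decisions))          # {'group_a': 0.8, 'group_b': 0.55}
print(disparate_impact_flags(decisions))  # group_b flagged at ~0.69 of group_a's rate
```

A flag like this is a trigger for investigation, not proof of unfair treatment; running it on every release is one way to turn "check for bias" into a recurring control.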

Data governance is equally important. IBM argues that a reactive, traditional approach to data management is no longer enough. You’ll need to:

Agentic AI solutions must be designed from the start to meet regulatory requirements and standards, such as GDPR, OCC and MAS guidance, and ISO/IEC 27001. Compliance also depends on data quality: as noted earlier, poor-quality or fragmented data can lead to hallucinations and unexpected outcomes, especially as agents scale and interact.

By building with compliance and data quality in mind, you can scale your systems into new use cases without costly rebuilds down the line.

Developing the strategy, governance, security, and compliance capabilities for agentic AI requires expertise that many financial institutions don’t have in-house. According to EY, this is where strategic partnerships can be a key differentiator.

Working with partners that have proven expertise in both agentic AI and financial services means you can set a feasible AI strategy and roadmap. You’ll also have the help you need to prepare your data, integrate AI into your existing processes and optimize implementation while accelerating adoption and reducing risk.

Neurons Lab is a UK and Singapore-based Agentic AI consultancy serving financial institutions across North America, Europe, and Asia. As an AI enablement partner, we design, build, and implement agentic AI solutions tailored for mid-to-large BFSIs operating in highly regulated environments, including banks, insurers, and wealth management firms. Trusted by 100+ clients, such as HSBC, Visa, and AXA, we co-create agentic systems that run in production and scale across your organization.

With Neurons Lab, you can become AI-native through our comprehensive approach that combines leadership alignment with technology integration to achieve measurable outcomes.

If you’d like to learn more about how we can help you develop, implement, and scale agentic AI systems tailored to your organization’s needs, get in touch with us today.

Agentic AI will not replace human finance professionals. Instead, it will augment their capabilities by automating routine tasks and freeing up more time for strategic work.

Financial services firms can prepare their employees for agentic AI by establishing clear governance and risk frameworks, investing in AI training and upskilling on the responsible use of AI as well as prioritising change management to foster a culture of human-AI collaboration.

The cost of developing AI as a bank varies with the complexity of your implementation, whether you build in-house or work with an external partner, and other factors. Online estimates vary widely, ranging from as little as $15,000 to well over $500,000.

To ensure a high ROI with agentic AI in finance, align your AI projects to a clear strategic goal, target high-volume use cases that create scalable value, define measurable success metrics, and track progress with reliable baseline data.
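Tracking progress against a baseline can be as simple as recording each KPI before rollout and computing the relative change afterward. In this minimal sketch the metric names and values are hypothetical; note that for some KPIs, such as time or cost per case, a decrease is the improvement, so the sign is flipped.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    name: str
    baseline: float
    current: float
    lower_is_better: bool = False

    def improvement(self) -> float:
        """Relative improvement vs. baseline; positive always means better."""
        change = (self.current - self.baseline) / self.baseline
        return -change if self.lower_is_better else change


# Hypothetical before/after measurements for an agentic AI pilot.
kpis = [
    Kpi("AML hours per case", baseline=4.0, current=2.0, lower_is_better=True),
    Kpi("KYC cases auto-resolved (%)", baseline=60.0, current=90.0),
    Kpi("Cost per onboarding ($)", baseline=120.0, current=132.0, lower_is_better=True),
]

for k in kpis:
    print(f"{k.name}: {k.improvement():+.0%}")
# AML hours per case: +50%
# KYC cases auto-resolved (%): +50%
# Cost per onboarding ($): -10%
```

Keeping the sign convention consistent ("positive means better") makes it harder to misread a cost increase as a win when several KPIs are reported side by side.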

Building in-house may be the better option if you already have established AI teams with a proven track record of deploying and maintaining complex systems at scale. If you don’t have that expertise yet, partnering with an AI consultancy like Neurons Lab can help you deploy agentic AI solutions while developing your own team’s skills and capabilities to manage and expand the systems independently over time.

Sources:
