5 Global AI System Integrator Companies for Financial Services

See top global system integrator companies for AI in financial services, plus key criteria for governance, security, and production-ready delivery in 2026.

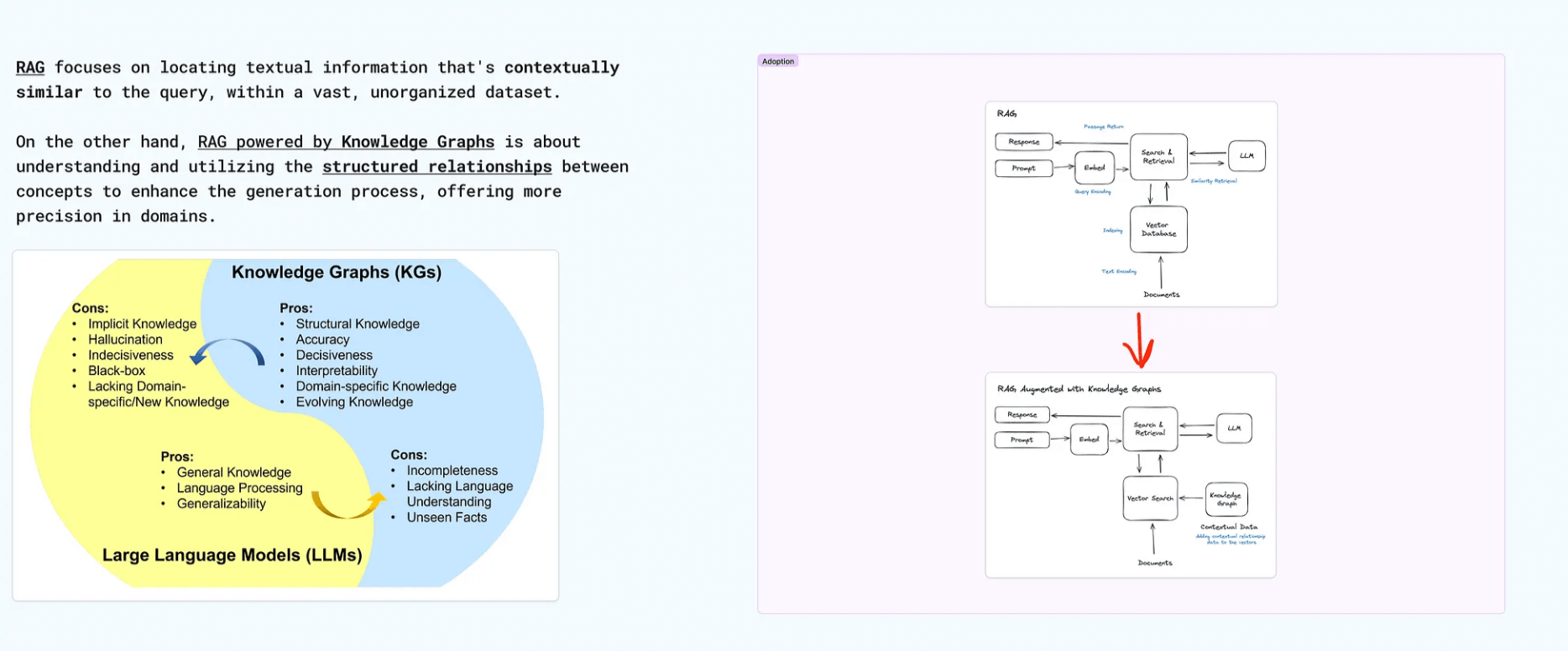

Integrating a Knowledge Graph (KG) with a retrieval-augmented generation (RAG) system creates a powerful hybrid known as G-RAG.

This integration enhances information retrieval, data visualization, clustering, and segmentation while mitigating issues like hallucination in LLMs.

As research and development continue, the potential for G-RAG systems to revolutionize various industries becomes increasingly apparent.

By leveraging the strengths of both KGs and RAG systems, we can create more accurate, reliable, and efficient AI solutions. The future of G-RAG is bright, promising significant advancements in how we retrieve, generate, and utilize information.

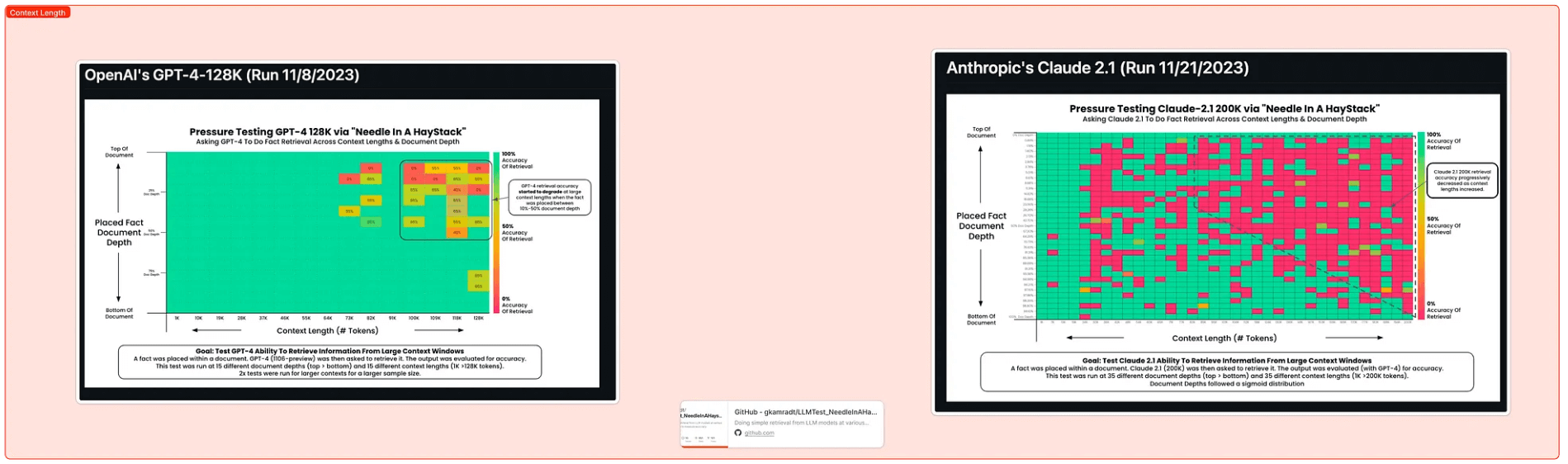

In the rapidly evolving landscape of artificial intelligence, enhancing the performance of RAG systems has become a significant focus for researchers and practitioners.

One promising approach to achieving this goal is integrating Knowledge Graphs (KGs) with RAG systems, resulting in a more powerful and efficient hybrid: G-RAG. In this article, I will explore how KGs can enhance RAG systems.

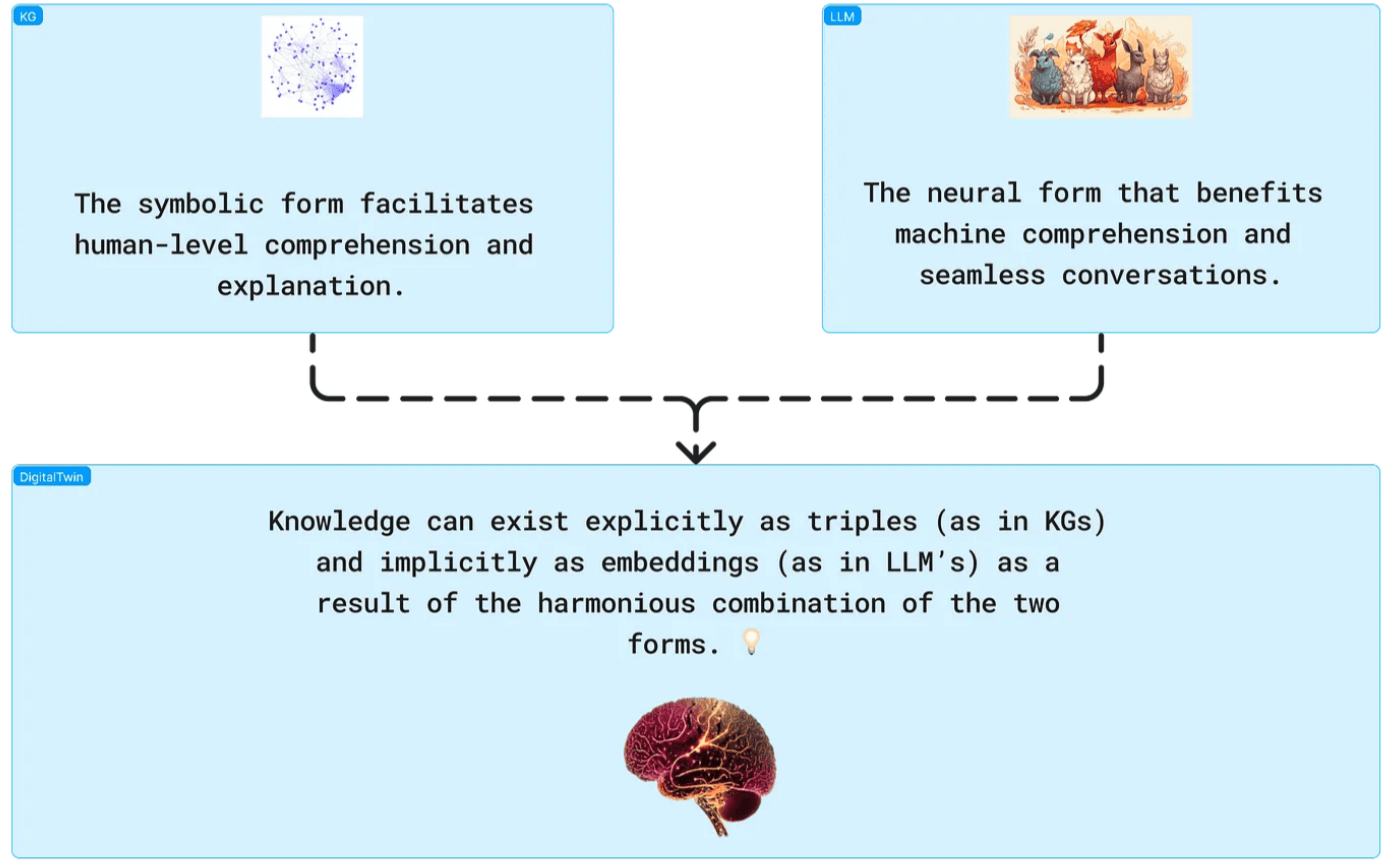

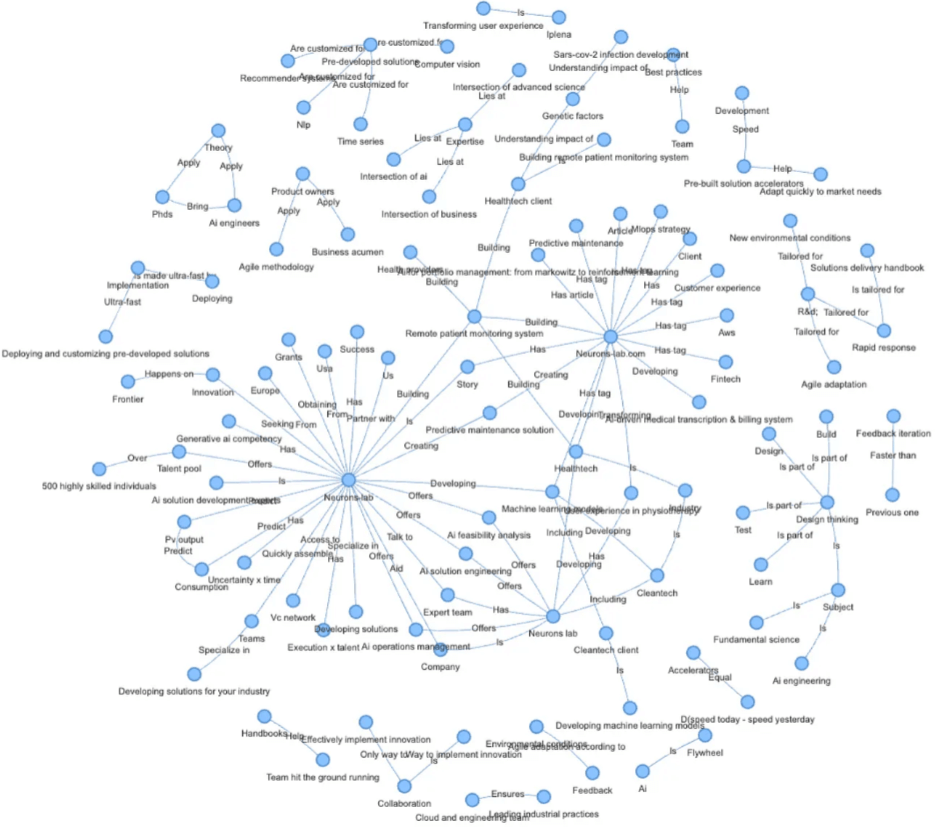

Knowledge Graphs are structured representations of knowledge, where entities are nodes connected by relationships (edges). They excel at capturing and organizing information in a way that is both human-readable and machine-actionable.

By integrating KGs with RAG systems, we can significantly enhance their ability to understand, retrieve, and generate relevant and accurate information.

One of the primary benefits of using KGs in RAG systems is their ability to expand the domain of information retrieval. By increasing the depth (how many relationship hops are followed) and breadth (how many neighboring nodes are collected) of the traversal, the system can extract information from a larger and more interconnected set of data points.

This expanded domain allows for more comprehensive responses and richer contextual understanding. For instance, by adjusting the retrieval parameters, such as the number of nodes to collect and the depth of relationships to follow, the system can extract a wider array of information.

This approach enables the RAG system to retrieve detailed and nuanced responses, going beyond the capabilities of traditional language models.
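A minimal Python sketch of depth- and breadth-limited retrieval follows. It assumes the KG is held in memory as (head, relation, tail) triplets about a hypothetical company, uses breadth-first search, and exposes the two knobs discussed above as the hypothetical parameters `max_depth` and `max_nodes`.

```python
from collections import deque

# Hypothetical toy KG: (head, relation, tail) triplets.
TRIPLETS = [
    ("Acme Bank", "headquartered in", "London"),
    ("Acme Bank", "offers", "Mortgages"),
    ("Acme Bank", "has contact", "Support Desk"),
    ("London", "located in", "United Kingdom"),
    ("Support Desk", "phone number", "+44 20 0000 0000"),
]


def expand_subgraph(triplets, seed, max_depth=1, max_nodes=10):
    """Collect facts reachable from `seed` with breadth-first search.

    max_depth: maximum number of relationship hops to follow (depth).
    max_nodes: maximum number of distinct entities to collect (breadth).
    Edges are traversed in both directions.
    """
    adjacency = {}
    for h, r, t in triplets:
        adjacency.setdefault(h, []).append(((h, r, t), t))
        adjacency.setdefault(t, []).append(((h, r, t), h))

    visited = {seed}
    facts, seen_facts = [], set()
    queue = deque([(seed, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth >= max_depth:
            continue  # do not expand beyond the depth limit
        for fact, other in adjacency.get(node, []):
            if other not in visited:
                if len(visited) >= max_nodes:
                    continue  # breadth limit reached; skip new entities
                visited.add(other)
                queue.append((other, depth + 1))
            if fact not in seen_facts:
                seen_facts.add(fact)
                facts.append(fact)
    return facts


print(expand_subgraph(TRIPLETS, "Acme Bank", max_depth=1))
print(expand_subgraph(TRIPLETS, "Acme Bank", max_depth=2))
```

Raising `max_depth` from 1 to 2 pulls in facts about the bank's city and its support desk, which is the wider context described above; lowering `max_nodes` trims the breadth instead.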

We evaluated the performance of a vanilla LLM against the KG + LLM model. Participants interacted with the RAG application and rated each response on one of three levels of performance:

| Model | Success Rate | Partial Success | Failed |
|---|---|---|---|
| Vanilla LLM | 30.56% | 38.89% | 27.78% |
| KG + LLM | 41.18% | 17.65% | 41.18% |

Relative to the vanilla LLM, the KG + LLM model increases the success rate by 34.75% (from 30.56% to 41.18%, or 10.62 percentage points) and reduces partial successes by 54.62% (from 38.89% to 17.65%), indicating more definitive outcomes.

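The failure rate also increased, from 27.78% to 41.18%. Taken together with the drop in partial successes, we read this as a sign of the model's more decisive behavior in handling complex tasks: it tends to either answer fully or fail outright, rather than return a partially correct answer.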
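The percentages quoted for these results are relative changes, which are easy to confuse with percentage-point differences. This short Python sketch recomputes both from the reported figures, including the change in the failure rate; the function and variable names are our own.

```python
def relative_change(old, new):
    """Relative change in percent: (new - old) / old * 100."""
    return (new - old) / old * 100


def point_change(old, new):
    """Absolute change in percentage points."""
    return new - old


# Reported evaluation figures (percent of responses).
vanilla = {"success": 30.56, "partial": 38.89, "failed": 27.78}
kg_llm = {"success": 41.18, "partial": 17.65, "failed": 41.18}

for metric in ("success", "partial", "failed"):
    old, new = vanilla[metric], kg_llm[metric]
    print(f"{metric}: {point_change(old, new):+.2f} pp, "
          f"{relative_change(old, new):+.2f}% relative")
# success: +10.62 pp, +34.75% relative
# partial: -21.24 pp, -54.62% relative
# failed: +13.40 pp, +48.24% relative
```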

Integrating KGs with RAG systems can also refine the process of information retrieval. By leveraging the structured nature of KGs, the system can provide more accurate and contextually relevant answers.

For example, when querying how to contact a company, the KG can supply precise contact details such as phone numbers and addresses, which may not be easily retrievable through standard LLMs.

This ability to extract and present factual information is crucial for applications that require high precision and reliability, and it also helps counter the hallucination problem. [55:43]
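To illustrate the contact-details example, here is a minimal Python sketch of an exact lookup over triplets. The company name, relation names, address, and phone number are hypothetical placeholders, and the `lookup` helper is our own illustration rather than a library API.

```python
# Hypothetical company facts as (head, relation, tail) triplets.
KG = [
    ("Acme Corp", "has phone", "+1 555 0100"),
    ("Acme Corp", "has address", "1 Main Street, Springfield"),
    ("Acme Corp", "located in", "USA"),
]


def lookup(kg, head, relations):
    """Return stored tails for `head`, grouped by relation.

    Values come verbatim from the KG; nothing is generated, so an unknown
    entity yields an empty dict instead of a made-up answer.
    """
    wanted = set(relations)
    result = {}
    for h, r, t in kg:
        if h == head and r in wanted:
            result.setdefault(r, []).append(t)
    return result


contact = lookup(KG, "Acme Corp", ["has phone", "has address"])
# {'has phone': ['+1 555 0100'], 'has address': ['1 Main Street, Springfield']}
```

Because the lookup either returns stored values or an empty result, the application can reply "not found" instead of letting the LLM guess.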

To appreciate how Knowledge Graphs (KGs) enhance Retrieval-Augmented Generation (RAG) systems, it’s essential to grasp the fundamental concepts of KGs, including triplet formation, graph neural networks (GNNs), and the roles of nodes and entities.

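Triplet Formation

A Knowledge Graph represents knowledge as a set of triplets, each consisting of a head (the subject entity), a relation (the relationship between the entities), and a tail (the object entity).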

For example, “Neurons Lab (Head) is located in (Relation) Europe (Tail)” captures a specific fact.
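In code, a triplet can be as simple as a named tuple. The sketch below (Python, with a hypothetical `to_sentence` helper of our own) stores the example fact and turns it back into text, which is one way a fact can be passed to an LLM as context.

```python
from typing import NamedTuple


class Triplet(NamedTuple):
    head: str      # subject entity
    relation: str  # relationship between the entities
    tail: str      # object entity

    def to_sentence(self) -> str:
        """Verbalize the fact, e.g. for use as LLM context."""
        return f"{self.head} {self.relation} {self.tail}."


fact = Triplet(head="Neurons Lab", relation="is located in", tail="Europe")
print(fact.to_sentence())  # Neurons Lab is located in Europe.
```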

Graph Neural Networks (GNNs)

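Graph Neural Networks are specialized for graph-structured data. They enhance KGs by learning a vector representation (embedding) for each node, computed by repeatedly aggregating information from the node's neighbors along the graph's edges.

These embeddings improve tasks like node classification and link prediction within KGs.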
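To make the aggregation idea concrete, here is a deliberately simplified message-passing step in plain Python. It is a sketch under stated assumptions, not a specific GNN architecture: real models such as GCN or GraphSAGE use learned weight matrices and a nonlinearity, which we replace here with two fixed scalar weights. The `link_score` helper is a common dot-product decoder for link prediction, included for illustration.

```python
def gnn_layer(features, edges, self_weight=0.5, neighbor_weight=0.5):
    """One simplified message-passing step with mean aggregation.

    features: dict mapping node -> feature vector (list of floats).
    edges: iterable of (u, v) pairs, treated as undirected.
    Fixed scalar weights stand in for learned weight matrices, and no
    nonlinearity is applied (both simplifications of a real GNN).
    """
    neighbors = {node: [] for node in features}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)

    updated = {}
    for node, h in features.items():
        nbrs = neighbors[node]
        if nbrs:
            # Average the neighbors' vectors dimension by dimension.
            agg = [sum(features[m][i] for m in nbrs) / len(nbrs) for i in range(len(h))]
        else:
            agg = [0.0] * len(h)
        updated[node] = [self_weight * a + neighbor_weight * b for a, b in zip(h, agg)]
    return updated


def link_score(embeddings, u, v):
    """Dot-product score; a higher value suggests a likelier link u-v."""
    return sum(a * b for a, b in zip(embeddings[u], embeddings[v]))


features = {"A": [1.0, 0.0], "B": [0.0, 1.0], "C": [1.0, 1.0]}
h = gnn_layer(features, [("A", "B"), ("B", "C")])
# h == {"A": [0.5, 0.5], "B": [0.5, 0.75], "C": [0.5, 1.0]}
print(link_score(h, "A", "C"))  # 0.75
```

Stacking several such layers lets information travel more than one hop, which is how a node's embedding comes to reflect its wider neighborhood.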

Nodes and Entities

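In KGs, nodes represent entities, such as “Neurons Lab” and “Europe” in the example above, and edges represent the relations that connect them.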

Another significant advantage of integrating KGs with RAG systems is the improved capability for data visualization and analysis. Graph embeddings, which preserve the relationships and structure within a KG, enable sophisticated visualizations that can reveal patterns and insights not immediately apparent from raw data.

By plotting these sub-graphs or their embeddings, we can see how different entities and their relationships are organized within the KG. This visualization helps in understanding the underlying structure and connections, making the data easier to analyze and interpret. [57:20]
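One common way to plot embeddings is to project them to two dimensions. The sketch below uses principal component analysis (PCA), computed by power iteration in plain Python; PCA is our choice here (t-SNE and UMAP are frequent alternatives), and the toy vectors stand in for embeddings that would come from a model such as a GNN. The returned 2-D coordinates can be passed to any scatter-plot library.

```python
import math
import random


def pca_2d(vectors, iters=500, seed=0):
    """Project vectors onto their top-2 principal components.

    Uses power iteration on the covariance matrix, projecting out the first
    component while searching for the second. Intended for small toy data.
    """
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    centered = [[v[j] - mean[j] for j in range(d)] for v in vectors]
    cov = [[sum(x[i] * x[j] for x in centered) / n for j in range(d)] for i in range(d)]

    rng = random.Random(seed)
    components = []
    for _ in range(2):
        w = [rng.uniform(-1.0, 1.0) for _ in range(d)]
        for _ in range(iters):
            w = [sum(cov[i][j] * w[j] for j in range(d)) for i in range(d)]
            # Remove directions already captured by earlier components.
            for c in components:
                dot = sum(a * b for a, b in zip(w, c))
                w = [a - dot * b for a, b in zip(w, c)]
            norm = math.sqrt(sum(a * a for a in w))
            if norm < 1e-12:
                break  # no variance left in the remaining directions
            w = [a / norm for a in w]
        components.append(w)
    # 2-D coordinates for each input vector, ready for a scatter plot.
    return [[sum(a * b for a, b in zip(x, c)) for c in components] for x in centered]


# Toy 3-D "embeddings" that happen to lie in a plane.
points = [[-2.0, -0.5, 0.0], [2.0, -0.5, 0.0], [-2.0, 0.5, 0.0], [2.0, 0.5, 0.0], [0.0, 0.0, 0.0]]
coords = pca_2d(points)
```

Because these toy points lie in a plane, the 2-D projection preserves their pairwise distances; with real embeddings some structure is inevitably lost, which is why plots of this kind are aids to interpretation rather than exact maps.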

A well-known challenge in using language models is the issue of hallucination, where the model generates plausible but incorrect or nonsensical information. KGs can help mitigate this problem by providing a structured and factual basis for information retrieval and generation.

Setting sampling parameters such as temperature to zero reduces the likelihood of hallucination, but this is not enough on its own: a language model is designed to predict the most probable next token, and the most probable continuation can still be factually wrong.

With a KG, by contrast, we compute the relations and facts in the KG that are most semantically similar to the query and use them as context for the LLM. This narrows the model's search space and helps ensure that the generated responses are grounded in the factual data stored in the KG. [01:01:24]
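A minimal sketch of this retrieve-then-ground step in Python follows. The bag-of-words `embed` function is a placeholder assumption standing in for a real embedding model, the triplets are toy data, and the prompt wording in `build_prompt` is illustrative, not a prescribed template.

```python
import math
import re
from collections import Counter

# Hypothetical KG facts as (head, relation, tail) triplets.
TRIPLETS = [
    ("Neurons Lab", "is located in", "Europe"),
    ("Neurons Lab", "is an", "AWS Advanced Partner"),
    ("Europe", "is a", "continent"),
]


def embed(text):
    """Placeholder embedding: bag-of-words counts.

    A real system would call an embedding model here; this stand-in is an
    assumption that keeps the sketch self-contained.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_facts(query, triplets, top_k=2):
    """Rank facts by similarity to the query and keep the top_k matches."""
    q = embed(query)
    scored = [(cosine(q, embed(" ".join(t))), t) for t in triplets]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [t for score, t in scored[:top_k] if score > 0]


def build_prompt(query, facts):
    """Place the retrieved facts in the prompt so the LLM answers from them."""
    context = "\n".join(f"- {h} {r} {t}" for h, r, t in facts)
    return (
        "Answer using only the facts below. If they are not sufficient, say so.\n"
        f"Facts:\n{context}\n\nQuestion: {query}"
    )


question = "Where is Neurons Lab located?"
print(build_prompt(question, retrieve_facts(question, TRIPLETS)))
```

The instruction to answer only from the listed facts is what limits the model's search space; `top_k` controls how much KG context is passed along.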

The integration of KGs with RAG systems opens up exciting possibilities for future development. While effective prompting can enhance triplet generation (the creation of entity-relationship-entity structures), fine-tuning specialized models can further improve performance and quality.
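For the prompting route, the sketch below pairs a hypothetical extraction prompt with a parser for the model's reply. The `(head | relation | tail)` line format is an assumption we chose so that the output can be parsed reliably; lines that do not match, such as a chatty preamble, are simply skipped.

```python
import re

# Hypothetical prompt template; the wording and the "(head | relation | tail)"
# output format are assumptions, not a fixed standard.
TRIPLET_PROMPT = (
    "Extract knowledge-graph triplets from the text below.\n"
    "Return one triplet per line in the form (head | relation | tail).\n\n"
    "Text: {text}"
)

LINE_PATTERN = re.compile(r"^\(\s*(.+?)\s*\|\s*(.+?)\s*\|\s*(.+?)\s*\)$")


def parse_triplets(llm_output):
    """Parse lines like '(Neurons Lab | is located in | Europe)'; skip malformed lines."""
    triplets = []
    for line in llm_output.splitlines():
        match = LINE_PATTERN.match(line.strip())
        if match:
            triplets.append(match.groups())
    return triplets


sample_reply = """Sure, here are the triplets:
(Neurons Lab | is located in | Europe)
(Neurons Lab | is an | AWS Advanced Partner)"""
print(parse_triplets(sample_reply))
```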

Research in graph language models and graph representation learning is ongoing, with the potential to significantly advance the capabilities of G-RAG systems. By continuing to explore and develop these technologies, we can create more sophisticated and effective solutions for a wide range of applications.

Neurons Lab delivers AI transformation services to guide enterprises into the new era of AI. Our approach covers the complete AI spectrum, combining leadership alignment with technology integration to deliver measurable outcomes.

As an AWS Advanced Partner and GenAI competency holder, we have successfully delivered tailored AI solutions to over 100 clients, including Fortune 500 companies and governmental organizations.
