What is the Cost of AI for BFSIs in 2026? 4 Examples To Budget Accordingly

Here is how banks, insurers, and fintechs can budget for AI with scenarios and cost drivers—subscriptions, overages, infrastructure, and ownership

If you are asking how to build a multi-agent AI system, you’ve probably hit the limits of off-the-shelf tools like ChatGPT or Perplexity Finance that can’t execute multi-step decisions across teams, tools, and data silos. Most likely, you want to automate complex, regulated workflows, but are unsure if your in-house team knows how to design, secure, integrate and scale them.

But a multi-agent system isn’t the answer for every financial services use case.

As an AI-enablement company with experience implementing over 100 custom AI solutions across banking, insurance, and wealth, we know when a multi-agent system makes sense. In this article, we’ll help you understand if a multi-agent system is the right choice and how to design one with a secure, scalable architecture.


Want to build a tailored, effective and compliant multi-agent AI system? Neurons Lab can help. Book a call with us today.

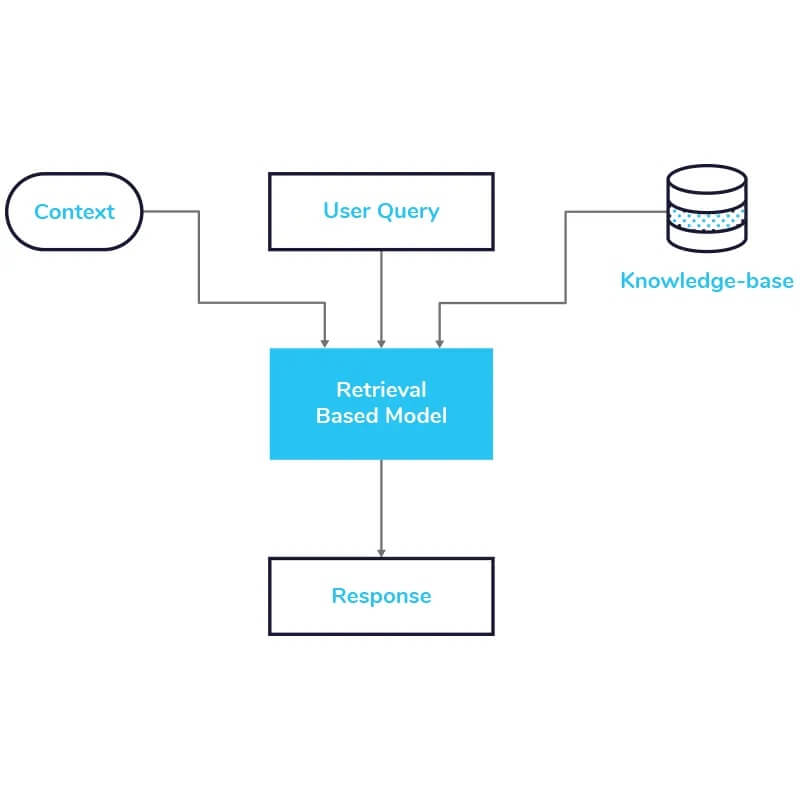

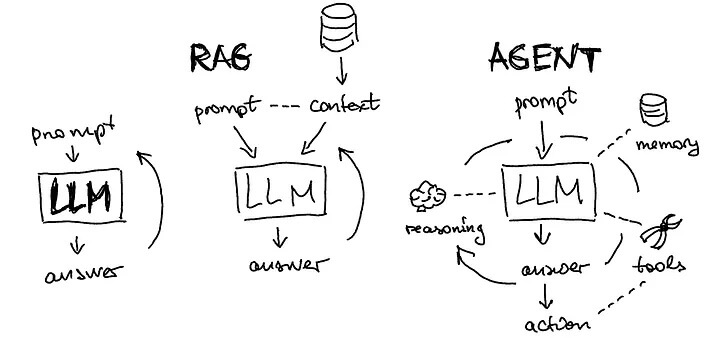

Before understanding how to build a multi-agent AI system for financial services, it helps to know when one is actually needed. If your goal is to cut costs by automating repetitive support tasks, a retrieval-augmented generation (RAG) model is usually enough.

However, when your AI must integrate data and actions from several systems (e.g., comparing products across banks, personalizing recommendations, or completing transactions), you need a multi-agent system to manage the broader, more complex customer journey.

Unlike a single-agent model that handles only isolated tasks like a FAQ chatbot, a multi-agent system distributes the complexity of multi-step workflows across a group of specialized agents. Each of these specialized agents performs a defined function and works together with the other agents to deliver precise, domain-specific outcomes.

This creates an “AI mind”—a coordinated AI system that can reason, make decisions, and use the right tools to execute complex workflows.

In financial services, these systems are valuable when the workflow crosses departments or combines rule-based and market-driven behaviors (e.g., comparing products across banks, executing transactions, managing insurance claims).

However, multi-agent systems also introduce higher complexity and stricter operational requirements, such as coordinating actions across risk, compliance, and product systems in real time. They should be considered only when a single-agent model or RAG-based approach can no longer meet your service, compliance, or integration needs.

Before designing a multi-agent AI system, it’s important to understand how your line of business shapes the system’s logic. Financial sectors differ in how predictable their operations are, and that difference determines how agents are designed, what data they access, and which tools they use.

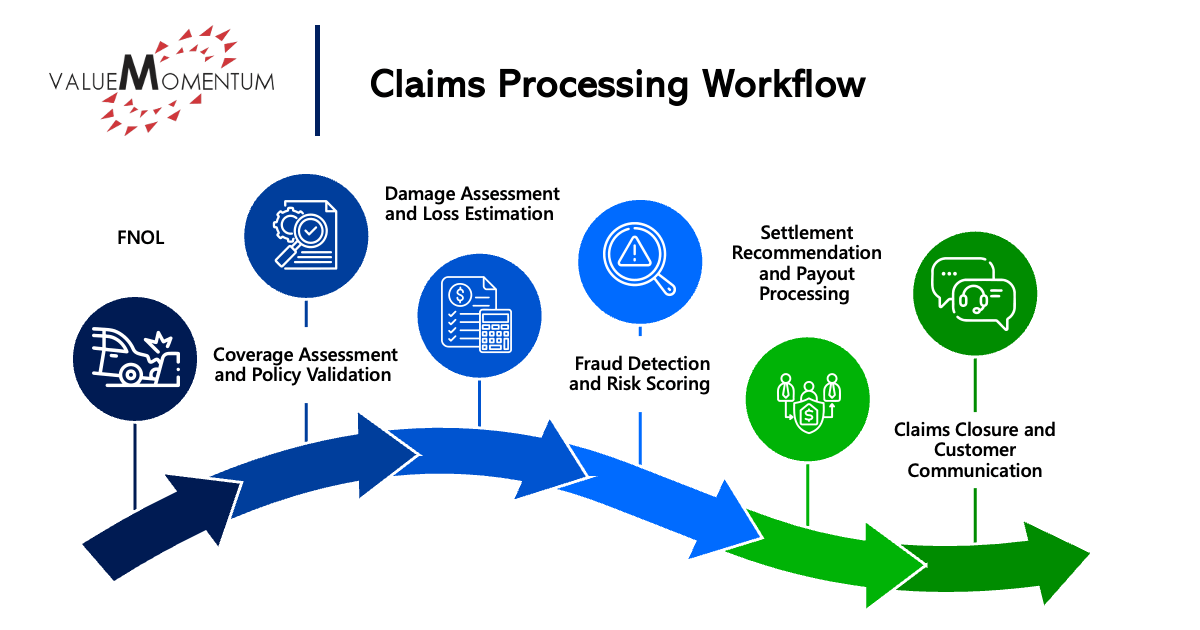

For instance, in insurance or retail banking, most operations follow rule-based logic. Policy rates, premiums, and loan terms are calculated using fixed formulas and compliance frameworks, producing predictable outcomes. While still complex multi-step workflows, these are deterministic systems, meaning that if you always use the same data, you’ll always get the same result.

A simple FAQ chatbot workflow – Image source: Medium

Take an insurance claims processing agent as an example. It needs to validate claim eligibility, calculate reimbursement based on policy rules, and route the case to human review if the claim exceeds a certain threshold. Given the same input, it will always produce the same output.
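The three steps of this claims workflow can be sketched as a pure function. This is a minimal illustration rather than a production design: the `Claim` fields, `REVIEW_THRESHOLD`, and `COVERAGE_RATE` are hypothetical values chosen for the example, and real rules would come from the policy itself.

```python
from dataclasses import dataclass

# Hypothetical values for illustration; real thresholds come from policy rules.
REVIEW_THRESHOLD = 5_000.00  # claims above this amount go to a human reviewer
COVERAGE_RATE = 0.80         # share of the claimed amount that is reimbursed


@dataclass(frozen=True)
class Claim:
    policy_active: bool
    covered_event: bool
    amount: float


def process_claim(claim: Claim) -> dict:
    """Validate, calculate, and route a claim using fixed rules only."""
    # Step 1: validate eligibility.
    if not (claim.policy_active and claim.covered_event):
        return {"status": "rejected", "payout": 0.0}
    # Step 2: calculate reimbursement from the policy rule.
    payout = round(claim.amount * COVERAGE_RATE, 2)
    # Step 3: route large claims to human review.
    if claim.amount > REVIEW_THRESHOLD:
        return {"status": "human_review", "payout": payout}
    return {"status": "approved", "payout": payout}
```

Because the function has no randomness and no hidden state, calling it twice with the same `Claim` returns the same result, which is what makes the workflow deterministic.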

An example of a claims processing workflow – Image Source: Value Momentum

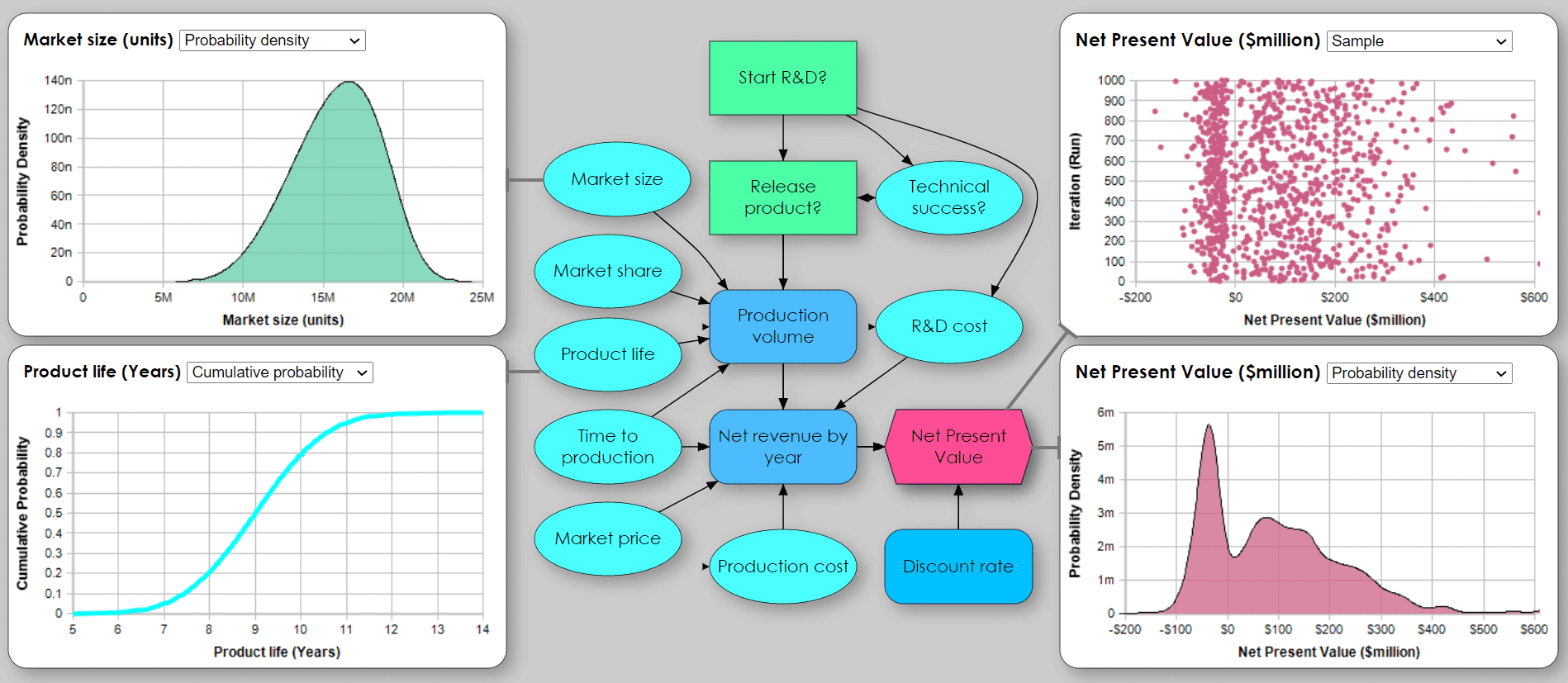

But sectors like wealth or asset management are different. Here, outcomes are unpredictable because they depend on variables like financial markets that fluctuate and can’t be controlled. These are nondeterministic systems, where the same input can produce different results depending on changing market conditions, timing, or investor behavior.

This distinction between deterministic and nondeterministic is crucial when building multi-agent architecture because it defines how you design your agents, what data they access, and which services/tools they use to arrive at reliable outputs:

For example, if a customer asks what would happen to their portfolio if they bought gold, a multi-agent design can’t rely on an out-of-the-box LLM tool like Perplexity Finance or surface-level web research. Pulling opinions from articles or analysts isn’t enough. What’s needed is a rigorous quantitative analysis of how a gold allocation would affect the customer’s unique portfolio.

To provide the best analysis possible, the multi-agent system needs access to:

A Monte Carlo simulation model – Image source: Lumina Analytica
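To make the gold question concrete, here is a minimal Monte Carlo sketch that compares simulated one-year returns with and without a gold allocation. Everything numeric in it is an assumption for illustration: the return and volatility figures are hypothetical, and gold is drawn independently of equities, which a real quantitative model would replace with estimated correlations and the customer's actual holdings.

```python
import random
import statistics


def simulate_portfolio(gold_weight, n_paths=10_000, seed=42):
    """Return simulated one-year returns for an equity/gold portfolio."""
    rng = random.Random(seed)  # fixed seed so a run can be reproduced and audited
    # Hypothetical annual assumptions: (mean return, standard deviation).
    equity_mu, equity_sigma = 0.07, 0.16
    gold_mu, gold_sigma = 0.04, 0.14
    returns = []
    for _ in range(n_paths):
        equity = rng.gauss(equity_mu, equity_sigma)
        gold = rng.gauss(gold_mu, gold_sigma)  # simplification: independent of equity
        returns.append((1 - gold_weight) * equity + gold_weight * gold)
    return returns


def summarize(returns):
    """Summarize a simulated return distribution."""
    ordered = sorted(returns)
    return {
        "mean": statistics.fmean(ordered),
        "stdev": statistics.stdev(ordered),
        "p05": ordered[int(0.05 * len(ordered))],  # downside scenario
        "p95": ordered[int(0.95 * len(ordered))],  # upside scenario
    }


if __name__ == "__main__":
    for weight in (0.0, 0.3):
        print(weight, summarize(simulate_portfolio(weight)))
```

The output is a distribution rather than a single number, so an agent can report a range of outcomes (for example, the 5th and 95th percentiles) instead of one point forecast.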

Once you understand whether your workflows operate in a deterministic or nondeterministic context, you can begin to think about selecting the right architecture, data sources, and reasoning engines to deliver consistent, high-confidence outcomes. In the next section, we explore our approach in building these systems for financial services.

Discover more about agentic AI in financial services

Once you decide a multi-agent system is the right choice and understand the level of complexity required, how do you start? We take a two-pronged approach that sets up boundaries before building your technical layer.

Financial services is more complex than most industries: it combines legacy infrastructure, fragmented tools, and strict regulatory requirements such as GDPR, FINRA rules, and regional banking supervision. It also requires the capability to scale systems across thousands of users and transactions.

This environment demands a stronger governance framework before any AI model or agent goes live. Governance sets the limits of each agent’s authority and ensures compliance, security, and traceability across every workflow.

This means defining clear rules for data, services, and reasoning.

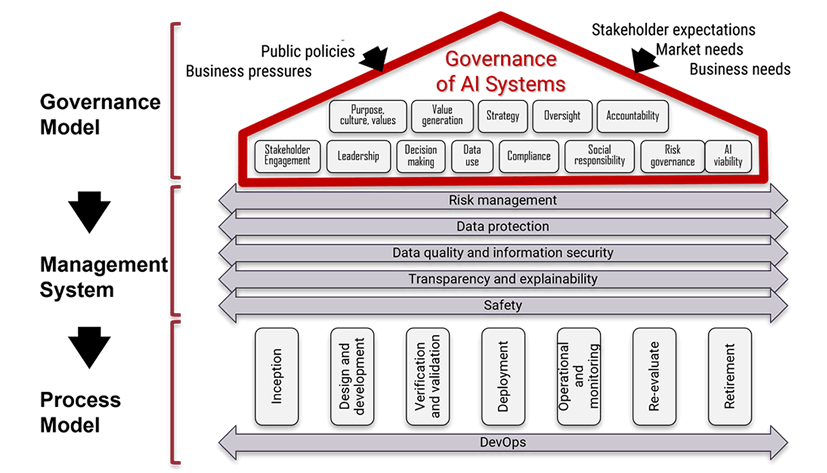

An example of AI governance framework – Image source: AI Governance

A well-designed governance layer prevents high-risk failures, such as data leaks, hallucinated answers, and unauthorized actions, that can quickly erode customer trust and breach regulatory standards. It also provides the foundation on which to build a reliable technical architecture (which we’ll get to in the next stage).

Here is how to build each layer of governance and why:

Multi-agent systems rely on a range of data sources that, without the right restrictions, risk exposing sensitive client and internal information and violating regulations. Agents may also act on the wrong data, leading to inaccurate and unreliable outcomes.

Governance defines how agents access, use, and store information, which is critical under data-specific regulations like GDPR, CCPA, and EBA Guidelines. Governance also ensures that models draw only from verified data and that each action can be traced and audited.

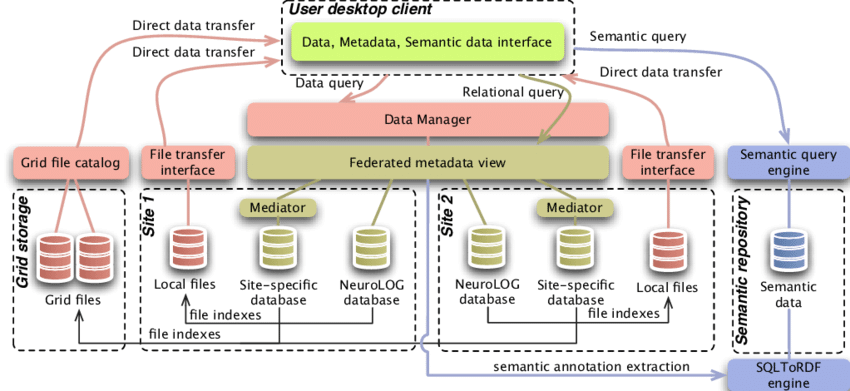

An example of a data governance framework – Image source: ResearchGate

The three main data types to manage are public, private and customer-level information:

When handling the data above, AI access should follow clearly defined permission levels. Each AI agent should see only the data fields required for its specific task. This level of granularity keeps data use compliant, limits exposure, and ensures that every action can be explained and audited.
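Field-level permissions of this kind can be sketched as a simple filter applied before any record reaches an agent. The agent names and field names below are hypothetical, and a real deployment would enforce this in the data layer rather than in application code alone.

```python
# Hypothetical agents and field names, for illustration only.
FIELD_PERMISSIONS = {
    "research_agent": {"product_id", "risk_rating"},
    "advisor_agent": {"customer_id", "risk_profile", "holdings"},
}


def view_for(agent: str, record: dict) -> dict:
    """Return only the fields this agent is allowed to see."""
    allowed = FIELD_PERMISSIONS.get(agent, set())  # unknown agents see nothing
    return {key: value for key, value in record.items() if key in allowed}


customer_record = {
    "customer_id": "C-001",
    "risk_profile": "balanced",
    "holdings": ["fund_a", "fund_b"],
    "national_id": "redacted-in-example",
}

# The advisor agent never receives the national_id field.
advisor_view = view_for("advisor_agent", customer_record)
```

Defaulting to an empty set means an agent that is missing from the table gets no data at all, so a configuration gap fails closed rather than open.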

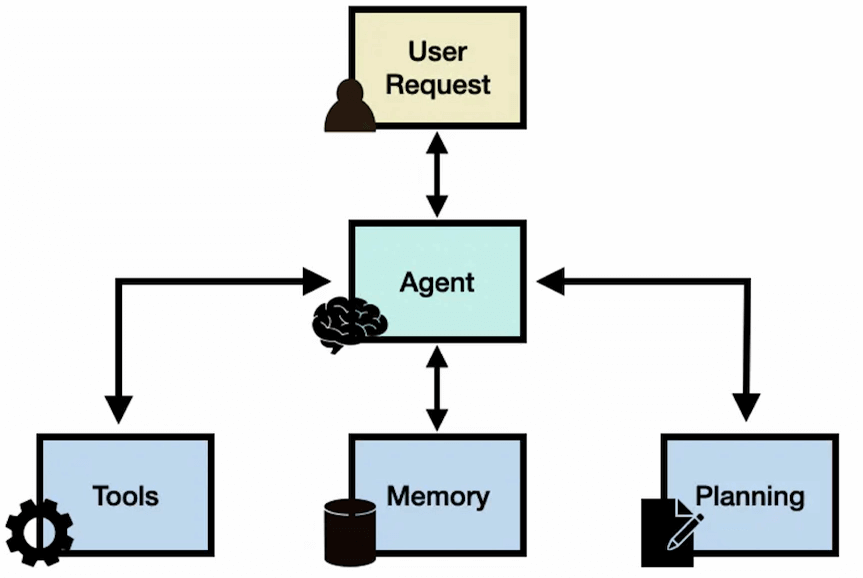

Agents build on the foundation of LLMs by adding reasoning, memory, tool use, and the ability to take action in real-world systems. Image source: Alex Honchar on Medium

To perform complex tasks, agents need to access services (also called tools). In financial institutions, these services include existing digital systems like payment or claims processing, policy management, customer registration, and trading.

Service governance defines which agents can use which systems and what specific actions they can perform. Each permission must be clearly documented, monitored, and reviewed so that each agent focuses only on its assigned role. This reduces errors, prevents unauthorized actions, and ensures every action can be traced, justified, and safely reversed if needed.

For example, a payments agent may access the payments database but never the customer onboarding system. Similarly, a compliance agent might connect to transaction logs for audit purposes but cannot interact with live payment systems. This prevents cross-agent interference and potential compliance breaches or fraud, and limits the scope of damage in case of errors.
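One way to express these rules is a permission table that is checked, and logged, before every service call. This is a sketch under assumptions: the service names, action names, and the `authorize` helper are hypothetical and do not correspond to any specific product.

```python
from datetime import datetime, timezone

# Hypothetical permission table: agent -> service -> allowed actions.
SERVICE_PERMISSIONS = {
    "payments_agent": {"payments_db": {"read", "write"}},
    "compliance_agent": {"transaction_logs": {"read"}},
}

AUDIT_LOG = []  # every attempt is recorded, whether allowed or denied


class PermissionDenied(Exception):
    """Raised when an agent attempts an action outside its role."""


def authorize(agent: str, service: str, action: str) -> None:
    """Check the permission table and record the attempt for audit."""
    allowed = action in SERVICE_PERMISSIONS.get(agent, {}).get(service, set())
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "service": service,
        "action": action,
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionDenied(f"{agent} may not {action} on {service}")
```

Calling `authorize("compliance_agent", "live_payments", "write")` raises `PermissionDenied`, and the denied attempt still appears in `AUDIT_LOG`, which supports the traceability described above.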

The third layer of governance covers reasoning—how one agent routes tasks to another and decides what to do next. To understand how this fits into a multi-agent AI system, it helps to look at how each AI agent is structured and what elements shape its decisions.

Every AI agent, powered by a large language model (LLM), operates through three core components:

Reasoning connects the data and services components, guiding how agents use data and services within their defined roles and collaborate as a coordinated system.

Typically, an orchestrator agent manages the first layer of reasoning, deciding which agent to activate next and how tasks should flow across the system. This ensures each agent stays within its scope, preventing errors and reducing the risk of unauthorized or inconsistent actions.

Internal reasoning flow in a multi-agent system

To illustrate this more clearly, let’s look at a Q&A chatbot that helps customers compare insurance policies across different banks. To do that fairly and securely, it needs multiple specialized agents:

As you can see, each agent has its own role. The research agent can’t access the payment system, while the cashier agent can’t verify claims.

This separation of logic limits risk and prevents costly mistakes, such as a research agent accidentally making a payment. It also ensures every decision can be traced and explained, reinforcing financial compliance and auditability.
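The routing role of an orchestrator can be sketched as a lookup from intent to agent. In this simplified example the intent is passed in directly, whereas in practice an LLM would typically classify it from the user's message. The intent labels and agent functions are hypothetical.

```python
def research_agent(task: str) -> str:
    """Compares policies; has no access to payment services."""
    return f"research: compared policies for '{task}'"


def cashier_agent(task: str) -> str:
    """Handles payments; cannot verify claims or run research."""
    return f"cashier: processed payment for '{task}'"


# Hypothetical routing table: intent -> the only agent allowed to handle it.
ROUTES = {
    "compare_policies": research_agent,
    "make_payment": cashier_agent,
}


def orchestrate(intent: str, task: str) -> str:
    """Send the task to the agent that owns the intent, or escalate."""
    agent = ROUTES.get(intent)
    if agent is None:
        # No agent owns this intent, so nothing is executed automatically.
        return "escalated to human: no agent is authorized for this intent"
    return agent(task)
```

Because each intent maps to exactly one agent, a research request can never reach the cashier agent, and unrecognized requests fall through to a human instead of being guessed at.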

Overall, when data, services, and reasoning are all strictly governed, multiple agents can collaborate safely, intelligently, and compliantly. This ensures accurate outcomes across complex financial services workflows without compromising security or trust.

Your technical architecture is what brings a multi-agent AI system to life. It combines software, large language models (LLMs), and infrastructure layers with the tightly governed data and services we covered earlier to form a complete, functioning system. Together, they enable how agents interact, execute tasks, and scale safely within a regulated environment.

The software layer sets out how agents are built, connected, and orchestrated. It enables communication between sub-agents and ensures they can work together as one coordinated system within their defined roles.

This layer specifies:

To build these connections between agents, you can use modern frameworks like LangChain to create and manage your multi-agent system’s logic. LangChain provides general tools and libraries for connecting models, data sources, and external actions (or “tools”). Its ecosystem also includes LangGraph, a library built specifically for multi-agent architectures.

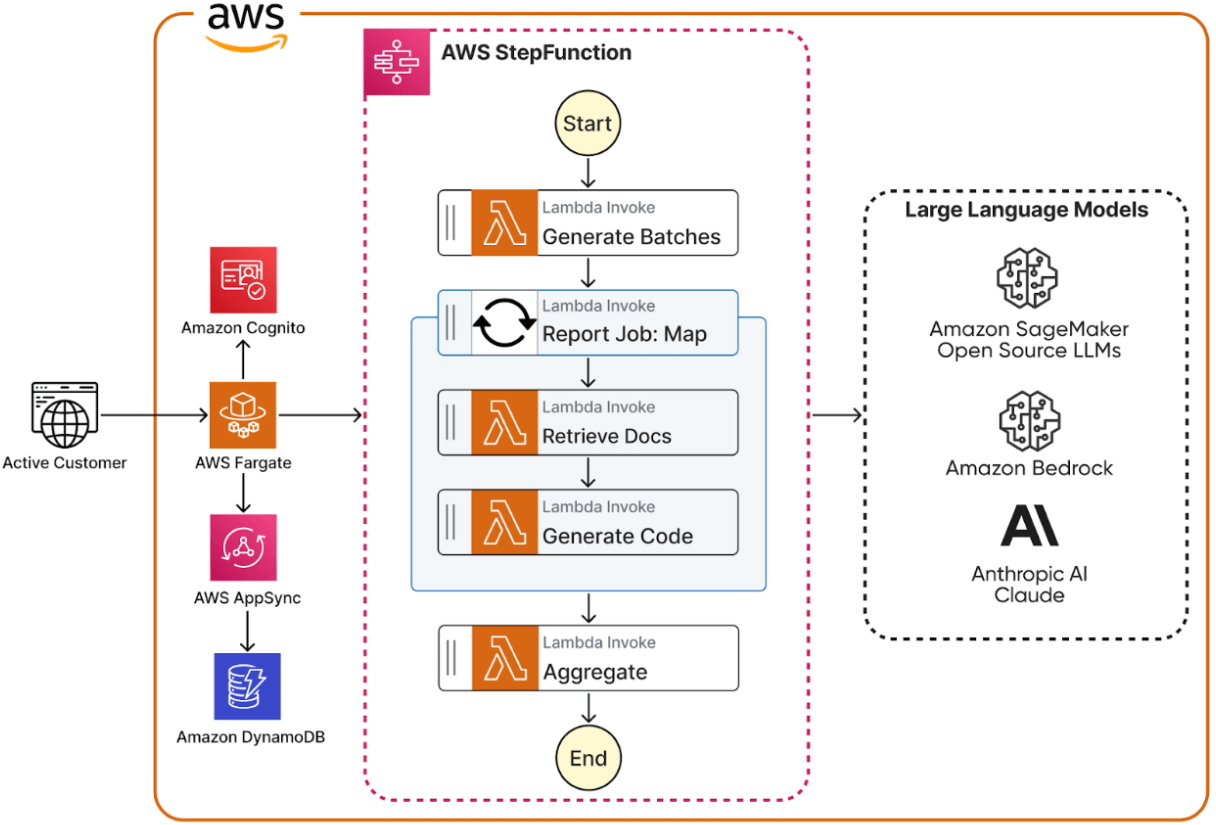

LangGraph structures agents as a graph, where each node represents an agent and each link defines how they interact. This makes it easier to visualize workflows and manage the flow of information across the system. Alternatively, if you’re already running AWS services, you can use AWS-native agent ecosystems, which integrate seamlessly with your existing environment.
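The graph idea itself can be shown in plain Python, without any framework. The sketch below deliberately does not use the LangGraph API: it only illustrates nodes as agent functions and edges as the allowed next step, with hypothetical node names.

```python
def intake(state: dict) -> dict:
    """First node: record the incoming question."""
    state["steps"].append("intake")
    return state


def research(state: dict) -> dict:
    """Second node: gather the information needed to answer."""
    state["steps"].append("research")
    return state


def respond(state: dict) -> dict:
    """Final node: produce the answer for the user."""
    state["steps"].append("respond")
    return state


# Nodes are agents; edges define which agent runs next (None ends the run).
NODES = {"intake": intake, "research": research, "respond": respond}
EDGES = {"intake": "research", "research": "respond", "respond": None}


def run_graph(start: str, state: dict) -> dict:
    """Walk the graph from the start node, passing shared state along."""
    node = start
    while node is not None:
        state = NODES[node](state)
        node = EDGES[node]
    return state


result = run_graph("intake", {"steps": []})
# result["steps"] is ["intake", "research", "respond"]
```

Keeping the edges in one table makes the workflow easy to inspect: anyone reviewing the system can see every permitted hand-off in a single place.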

Example of a cloud-based multi-agent AI system using AWS.

In multi-agent solutions, LLMs for finance are fine-tuned to go beyond generating text and predict actions, such as when to conduct a web search, query a database, or trigger another service. They form the reasoning layer, or the “brain”, that interprets user intent and selects the right service. By managing reasoning and linking to the right data and services, LLMs create an intelligent, reliable system capable of handling complex workflows.

Key LLM providers include OpenAI, Anthropic, and Google (Gemini), with Amazon Bedrock as an option for private cloud deployments. It’s important to consider the regional availability and compliance of each model to ensure your data stays within your geographical boundaries and remains compliant with local regulations. For example:

LLM reasoning and knowledge architecture in multi-agent systems

Since LLMs in a multi-agent system are connected to verified data and deterministic services, they’re far less likely to hallucinate or produce fabricated outputs. Operating within well-governed reasoning boundaries, each agent cross-checks information through structured workflows, keeping responses accurate, auditable, and grounded in real data.

Infrastructure refers to the hardware that runs the software. This is the physical compute layer: the machines and cloud resources that power the LLM tools and agent orchestration. It determines how quickly and reliably your system performs under live conditions.

This layer determines performance characteristics such as load management, scalability, availability, and latency. It also enables operational resilience (e.g., uptime) and compliance with regional data regulations (e.g., storing and processing EU customer data within the EU for GDPR).

You can deploy systems on premise for maximum control or in the cloud for faster scaling. Many financial firms use hybrid approaches, which keep sensitive data and models within private environments while using cloud resources to handle variable demand.

For instance, when user requests or model calls increase in volume, capacity can be expanded through additional compute resources. On the other hand, under lighter volumes, cloud resources can scale down automatically to control costs without compromising performance.
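The scale-up and scale-down behavior described here comes down to a simple capacity calculation. The sketch below is illustrative only: `capacity_per_replica`, `min_replicas`, and `max_replicas` are hypothetical parameters, and managed cloud autoscalers apply their own policies and metrics.

```python
import math


def desired_replicas(
    requests_per_second: float,
    capacity_per_replica: float = 50.0,  # hypothetical throughput of one instance
    min_replicas: int = 2,               # floor kept for availability
    max_replicas: int = 20,              # ceiling kept for cost control
) -> int:
    """Return how many instances are needed for the current load."""
    needed = math.ceil(requests_per_second / capacity_per_replica)
    return max(min_replicas, min(max_replicas, needed))
```

With these defaults, 10 requests per second keeps the minimum of 2 replicas, 500 requests per second scales to 10, and anything beyond 1,000 is capped at 20.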

Together, these form a predictable, auditable system that minimizes hallucinations and scales safely across complex FSI workflows.

But designing these multi-agent systems for nondeterministic environments with in-house AI development teams can be challenging. Institutions may lack the technical and architectural expertise to establish secure agent governance and access boundaries. Traditional IT and data teams in finance are often unfamiliar with systems that rely on probabilistic decision-making and dynamic reasoning.

That’s where Neurons Lab can help. In the next section, we’ll show how we help financial institutions build custom multi-agent AI solutions capable of complex, multi-step workflows.

To see how this all comes together in practice, let’s look at ARKEN, our multi-agent AI accelerator built specifically for the complexity of wealth management.

Neurons Lab’s ARKEN is a multi-agent solution accelerator for wealth management that you can customize to fit your specific use case and business KPIs

While off-the-shelf AI solutions like Claude for Finance or Perplexity Finance can surface real-time insights from public sources, they can’t interpret market dynamics or deliver accurate portfolio-level intelligence.

For wealth managers who need accurate, real-time insights to scale their workflows and free up time for more client-facing activities, these limitations are exactly what we address with ARKEN.

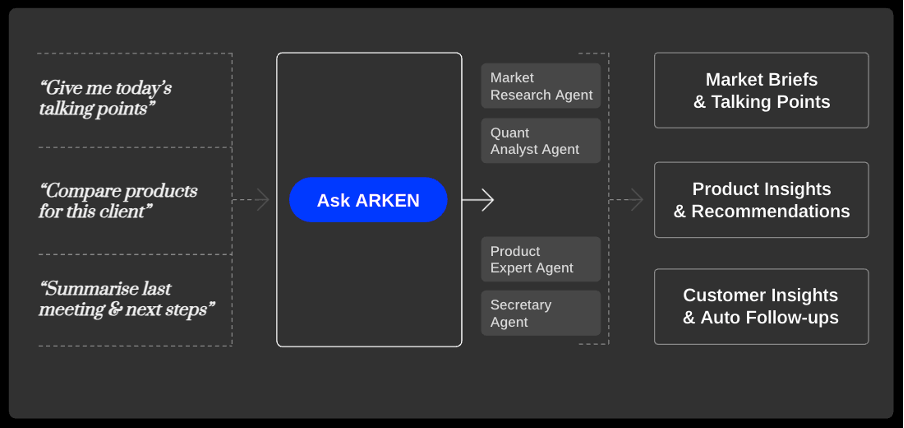

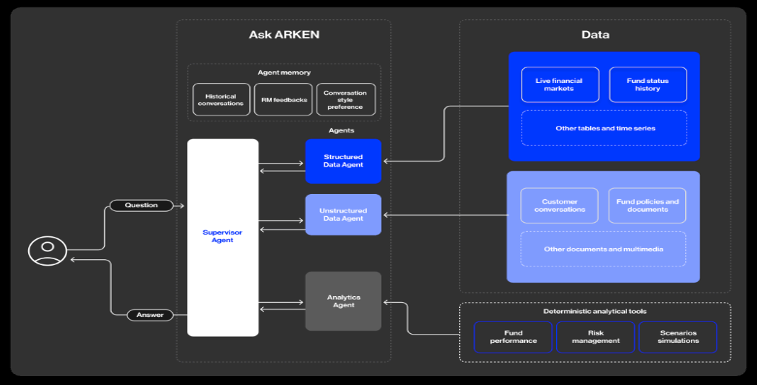

ARKEN’s multi-agent system generates relevant real-time insights that wealth managers can use to scale their workflows

ARKEN sits on top of existing wealth management systems and is powered by a multi-agent framework. Its agents draw on secure public, private and customer data sources, such as live market feeds, product catalogs categorized by risk profile, client portfolios, compliance rules, and knowledge graphs, to mirror real wealth-manager decision patterns.

Each agent then executes actions based on its defined role and action space. For example, when a wealth manager asks a question about a client’s portfolio:

The result is a multi-agent system that enhances decision-making with greater accuracy and personalization. Wealth managers can deliver faster, smarter, and more compliant client advice.

In fact, financial institutions using ARKEN have already seen measurable business outcomes, such as:

It’s through well-governed data, specialized agent systems, and our quantitative finance expertise that results like these are possible.

By designing the tools and services agents rely on for financial analysis, portfolio simulation, and risk management, we ensure outputs that are as accurate and reliable as possible in real market conditions. This was the case for a global asset management firm we partnered with, where we built an AI-driven investing system and designed the quantitative tools behind it.

A global asset management and investment advisory firm wanted to enhance its existing asset allocation approach to improve returns and reduce risk. The firm also aimed to launch a new investment product for both existing and new customers.

Neurons Lab was tasked with developing a custom AI solution to achieve four key goals:

We built a cloud-based research platform that uses macroeconomic and fundamental stock data to better capture risk. The platform used AI-powered portfolio clustering and market structure algorithms to improve risk modelling and make market insights more accurate.

Additionally, we:

Neurons Lab’s scalable cloud architecture running LLMs securely with high availability across multiple zones.

As a result, the solution improved financial performance, sped up strategy development, and strengthened risk management using advanced algorithms and a secure, scalable AWS setup. Measurable outcomes included:

Designing and deploying a multi-agent system in financial services requires a balance of compliance, performance, and explainability across multiple domains while ensuring your system behaves predictably in both deterministic and nondeterministic environments.

Our deep understanding of both types of systems comes from years of work across insurance, wealth management and banking, allowing you to build AI that acts reliably under fixed rules or fluctuating market conditions.

Neurons Lab is a UK and Singapore-based Agentic AI consultancy with advanced AWS competencies serving financial institutions across North America, Europe, and Asia. As an AI enablement partner, we design, build, and implement agentic AI solutions tailored for mid-to-large BFSIs operating in highly regulated environments, including banks, insurers, and wealth management firms. Trusted by 100+ clients, such as HSBC, Visa, and AXA, we co-create agentic systems that run in production and scale across your organization.

Through a co-development approach and access to a global talent network of over 500 AI engineers, your teams build internal AI capability while gaining continuous access to evolving model frameworks and best practices.

This means you get accurate, traceable, and compliant multi-agent systems built to handle both rule-based and probabilistic reasoning. They scale safely across complex financial workflows and continuously adapt to regulatory and market change.

Here’s why financial services firms partner with us to develop multi-agent systems:

Strong governance defines what each agent can access, how it reasons, and which actions it can take. Neurons Lab helps you establish clear access boundaries, granular permissions, and transparent decision logic across data, services, and reasoning layers.

This structure keeps systems compliant (e.g., with GDPR and regional banking supervision) and reduces the risk of unauthorized actions, while ensuring every decision is auditable and explainable.

With frameworks and platforms such as LangChain, LangGraph, Amazon Bedrock, and Azure OpenAI, your system connects software, infrastructure, and logic layers into one coordinated architecture.

Our pre-built solution accelerators, tested code, and reusable components reduce time to value and implementation risk, while hybrid-cloud options maintain performance, scalability, and regulatory compliance across markets.

In nondeterministic domains like asset and wealth management, Neurons Lab integrates quantitative-finance tools, including factor risk models and portfolio analytics. This way, your agents can analyze portfolios and model market scenarios accurately and transparently.

Building multi-agent AI systems in financial services is about creating intelligence that operates accurately, compliantly, and at scale. Achieving this requires specialized expertise in aligning governance, architecture and domain expertise. At Neurons Lab, we provide that expertise from start to finish, delivering multi-agent AI systems that work reliably and continue to evolve as market needs and regulations change.

Want to explore how a multi-agent AI system could work for your financial institution? Book a call with us today.
